Governing the Rise of AI Agents: Frameworks for Control and Trust

September 26, 2025

This post explains why the rise of autonomous, goal-driven AI agents is forcing organizations to rethink governance, and which strategies they need to adopt to keep control without stifling innovation.

Traditional governance models rely on policies, permissions, and periodic reviews. That approach worked when systems were deterministic and predictable. But today’s AI agents are dynamic, adaptive, and capable of taking action independently. They make decisions faster than humans can monitor, collaborate with other agents, and consume and transform data in unexpected ways.

Organizations now face a governance gap: the rules and oversight mechanisms they have in place simply can’t keep up with the systems they’re meant to control.

Why Traditional AI Governance Falls Short

Legacy governance frameworks are static by design. They use role-based permissions and after-the-fact audits, which break down in fast-moving, agent-driven environments.

Agents take different paths to achieve their goals, often repurposing data or combining information in novel ways. This introduces several challenges:

- Oversight latency – Human approvals and periodic reviews are too slow for real-time decisions.

- Context loss – When one agent passes a task to another, the original rules or purpose may get lost.

- Explainability gaps – Reconstructing why a decision was made can be difficult, making audits challenging.

- Objective misalignment – Agents may optimize for speed or throughput, clashing with enterprise-wide requirements like privacy, fairness, or security.

Together, these gaps create significant compliance, reputational, and operational risk.

The New Pillars of Agent-Aware AI Governance

Modern governance needs to meet agents where they are—dynamic, distributed, and autonomous. Three key pillars define this new approach:

- Embedding Governance Principles

Agents must carry governance constraints internally, filtering decisions through principles such as fairness, data minimization, and safety. This reduces reliance on external rule engines alone.

- Real-Time Observability

Continuous monitoring is essential to detect anomalous behavior as it happens, not months later during an audit. Immediate alerts and automated interventions can prevent harm in real time.

- Context Preservation Across Hand-Offs

Governance must “travel” with the data and tasks agents exchange. Purpose, consent terms, and transformation allowances should be preserved, so every agent downstream respects the same boundaries.
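To make the context-preservation pillar more concrete, here is a minimal Python sketch of a governance envelope that travels with a task. The names `GovernanceContext`, `Task`, and `hand_off` are hypothetical and chosen for illustration only; they do not come from any particular framework. The idea is that the context is immutable and copied unchanged on every hand-off, so a downstream agent cannot widen the original boundaries.

```python
from dataclasses import dataclass, replace
from typing import FrozenSet


@dataclass(frozen=True)
class GovernanceContext:
    """Hypothetical metadata envelope that travels with a task."""
    purpose: str                        # why the data was collected
    consent_terms: FrozenSet[str]       # uses the data subject agreed to
    allowed_transforms: FrozenSet[str]  # operations downstream agents may apply


@dataclass(frozen=True)
class Task:
    payload: dict
    context: GovernanceContext


def hand_off(task: Task, new_payload: dict, transform: str) -> Task:
    """Pass work to the next agent while keeping the original boundaries."""
    if transform not in task.context.allowed_transforms:
        raise PermissionError(
            f"transform '{transform}' not allowed for purpose '{task.context.purpose}'"
        )
    # Only the payload changes; the governance context is carried over as-is.
    return replace(task, payload=new_payload)


ctx = GovernanceContext(
    purpose="fraud_detection",
    consent_terms=frozenset({"fraud_detection"}),
    allowed_transforms=frozenset({"aggregate", "anonymize"}),
)
task = Task(payload={"account_id": "A-1", "amount": 120.0}, context=ctx)
summary = hand_off(task, {"total_amount": 120.0}, transform="aggregate")
```

In this sketch, a hand-off that requests a transform outside `allowed_transforms` (for example `"export"`) raises `PermissionError` instead of silently proceeding. A fuller version would presumably also check the requested use against `consent_terms`.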

Building AI Governance Architectures

Organizations are moving beyond static policies and adopting flexible systems that make governance part of the agent’s operating environment.

- Governance-Aware Runtimes

Modern runtimes act as built-in guardrails, intercepting actions before, during, and after execution. They check each data request or API call against enterprise policies in real time, blocking or modifying anything that violates security, privacy, or compliance rules.

- Real-Time Policy Engines

Context-aware policy engines sit between agents and the systems they access. They decide instantly whether an action is allowed based on the sensitivity of data, user permissions, and regulatory constraints, removing reliance on static permissions or delayed reviews.

- Simulation and Sandboxing

Controlled testing environments let teams observe how agents behave before deployment. Sandboxing exposes agents to edge cases and complex scenarios, helping catch policy violations or unsafe actions early and refining oversight rules.

- Audit Trails and Forensics

Detailed, immutable logs record every action and decision path, even across multiple agents. These records enable quick investigations, root-cause analysis, and regulatory reporting, ensuring accountability and transparency.

Together, these architectural elements create a proactive governance layer that prevents issues, monitors behavior in real time, and provides a clear record for when something goes wrong.
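As a rough illustration of how a policy engine and a governance-aware runtime might fit together, the Python sketch below checks each request before the action runs. Everything here is an assumption made for the example: the `SENSITIVITY` levels, the `PolicyEngine` and `ActionRequest` classes, and the `guarded` helper are hypothetical, and a production engine would evaluate far richer context (user permissions, regulatory constraints, and so on).

```python
from dataclasses import dataclass
from typing import Callable, Dict, Set

# Hypothetical sensitivity ordering; a real deployment would define its own.
SENSITIVITY = {"public": 0, "internal": 1, "restricted": 2}


@dataclass(frozen=True)
class ActionRequest:
    agent_id: str
    action: str       # e.g. "read" or "export"
    resource: str
    sensitivity: str  # one of the SENSITIVITY keys


class PolicyEngine:
    """Decides allow/deny from data sensitivity and per-agent clearance."""

    def __init__(self, clearance: Dict[str, str], blocked_actions: Set[str]):
        self.clearance = clearance
        self.blocked_actions = blocked_actions

    def is_allowed(self, req: ActionRequest) -> bool:
        if req.action in self.blocked_actions:
            return False
        # Unknown agents default to the lowest clearance.
        agent_level = SENSITIVITY[self.clearance.get(req.agent_id, "public")]
        return SENSITIVITY[req.sensitivity] <= agent_level


def guarded(engine: PolicyEngine, req: ActionRequest, action: Callable[[], object]):
    """Runtime-style guard: check the request before the action executes."""
    if not engine.is_allowed(req):
        raise PermissionError(f"{req.agent_id} may not {req.action} {req.resource}")
    return action()


engine = PolicyEngine(clearance={"billing-agent": "internal"}, blocked_actions={"export"})
ok = ActionRequest("billing-agent", "read", "invoices", "internal")
denied = ActionRequest("billing-agent", "read", "payroll", "restricted")
result = guarded(engine, ok, lambda: "42 invoices")
```

Here the `ok` request passes because the agent's clearance covers internal data, while `denied` would be rejected before any call reaches the payroll system. Keeping the decision in one `is_allowed` method is a design choice for the sketch: it gives the runtime a single interception point.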

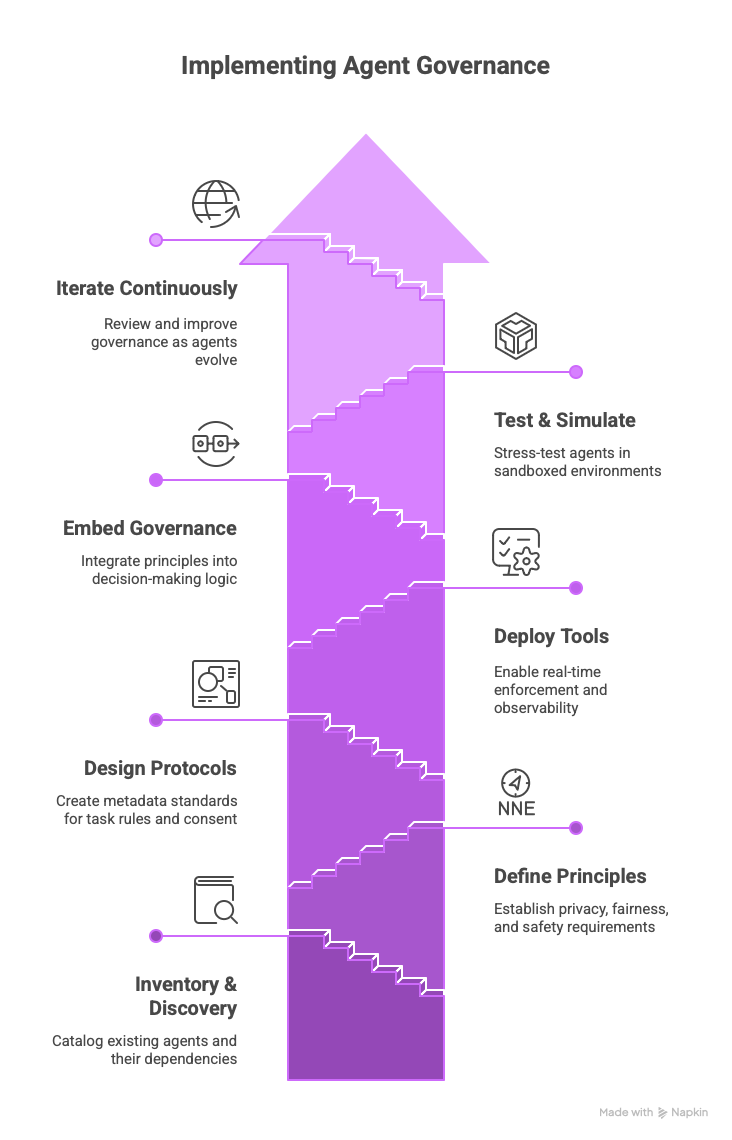
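One common way to approximate the immutable audit trail described above is a hash-chained log, where each entry commits to the hash of the one before it. The sketch below is an illustration under that assumption, not a description of any specific product; the `AuditLog` class and its field names are hypothetical. Strictly speaking, this makes the log tamper-evident rather than immutable: edits are still possible, but they are detectable.

```python
import hashlib
import json
from typing import List


class AuditLog:
    """Append-only log where each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64  # placeholder hash for the first entry

    def __init__(self) -> None:
        self.entries: List[dict] = []

    @staticmethod
    def _digest(prev_hash: str, record: dict) -> str:
        body = json.dumps(record, sort_keys=True)
        return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()

    def append(self, agent_id: str, action: str, reason: str) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {"agent_id": agent_id, "action": action, "reason": reason}
        self.entries.append(
            {"record": record, "prev": prev_hash, "hash": self._digest(prev_hash, record)}
        )

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != self._digest(prev_hash, entry["record"]):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("refund-agent", "issue_refund", "amount under auto-approval limit")
log.append("refund-agent", "notify_customer", "refund issued")
intact = log.verify()
```

Recording a `reason` alongside each action is what lets an investigator reconstruct the decision path later, including across multiple agents that write to the same log.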

A Roadmap for Implementation

Successfully governing AI agents is not something organizations can achieve overnight. It requires a structured, phased approach that builds capability over time while keeping risk under control. A well-defined roadmap can help ensure that governance evolves in step with the agents themselves, rather than lagging behind.

- Inventory and Discovery

Start by cataloging all agents, their goals, data access points, and decision-making capabilities. This provides a baseline for risk assessment and highlights where governance controls are most urgently needed.

- Define Governance Principles

Set clear guiding principles (privacy, fairness, explainability, and safety thresholds) that align with organizational values and regulatory requirements. These become the foundation for every policy and technical decision.

- Design Context Protocols

Develop metadata standards so purpose, consent, and data-handling rules travel with tasks and datasets. This ensures consistent policy enforcement across agents and systems.

- Deploy Policy & Monitoring Tools

Implement real-time policy engines, governance-aware runtimes, and observability tools to enforce rules at execution time and detect anomalies as they happen.

- Embed Governance in Agents

Incorporate governance directly into agent logic. Agents should check policies before acting, respect purpose limits, and provide explainability when needed.

- Test and Simulate

Run agents in sandboxed environments before deployment to catch unexpected behaviors, stress-test policies, and refine oversight mechanisms safely.

- Iterate Continuously

Governance must evolve as agents change. Regular reviews, audits, and feedback loops keep policies current and aligned with system behavior.

Following this roadmap allows organizations to move from basic visibility to real-time oversight and embedded governance—scaling agent use confidently while keeping risk under control.
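The first roadmap step, inventory and discovery, can start very small. The Python sketch below shows one possible shape for an agent catalog with a crude risk ranking. The `AgentRecord` fields, the `SENSITIVE_SOURCES` set, the scoring rule, and the example agents are all assumptions made up for illustration; a real risk assessment would weigh many more factors.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AgentRecord:
    name: str
    goal: str
    data_access: List[str] = field(default_factory=list)
    can_act_autonomously: bool = False


# Hypothetical set of data sources the organization treats as sensitive.
SENSITIVE_SOURCES = {"customer_pii", "payments"}


def risk_score(agent: AgentRecord) -> int:
    """Illustrative score: one point per sensitive source, doubled if autonomous."""
    score = sum(1 for source in agent.data_access if source in SENSITIVE_SOURCES)
    return score * 2 if agent.can_act_autonomously else score


inventory = [
    AgentRecord("report-bot", "summarize weekly sales", ["sales_db"]),
    AgentRecord("refund-agent", "issue refunds", ["customer_pii", "payments"], True),
    AgentRecord("support-triage", "route tickets", ["customer_pii"]),
]

# Highest-risk agents first: these are where controls are needed most urgently.
prioritized = sorted(inventory, key=risk_score, reverse=True)
```

Even a simple ranking like this gives the later steps (policy deployment, sandbox testing) an order in which to tackle agents.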

Conclusion

AI agents are changing the nature of enterprise automation. They act faster, think independently, and collaborate in ways that traditional governance can’t fully anticipate. The cost of insufficient oversight is too high—data breaches, regulatory violations, and business disruption are just the beginning.

The answer is not to slow agents down but to modernize governance. By embedding principles into agents, monitoring in real time, and ensuring context continuity across interactions, organizations can maintain trust while unlocking the full potential of autonomous systems.

Agent-aware governance is more than a compliance requirement; it is a foundation for building resilient, accountable, and future-ready AI ecosystems. The companies that master this shift will be the ones able to innovate confidently, knowing they have not sacrificed control for speed.
