Building Transparency and Trust in Agentic AI: The Rise of Agentic Observability

October 29, 2025

As AI agents move from experiments to enterprise-grade systems, organizations are realizing that success no longer depends only on accuracy or performance — it depends on visibility. When agents autonomously take actions, make decisions, or orchestrate workflows across tools and data sources, understanding how and why those actions occur becomes essential. This is where Agentic Observability steps in.

This post explains what Agentic Observability means, why it has become a critical capability for modern AI systems, how to implement it effectively, and which challenges and best practices enterprises should consider when adopting it. It also explores how the discipline is evolving as agent-based architectures become central to intelligent automation.

What Is Agentic Observability?

"Agentic Observability" is the practice of enabling comprehensive visibility, measurement, and governance over AI agents throughout their lifecycle — from design and testing to real-world deployment. Unlike traditional ML observability, which tracks model performance and drift, Agentic Observability focuses on multi-step reasoning, tool orchestration, memory management, and policy compliance.

Agents differ from simple predictive models because they operate autonomously, interact dynamically with users and systems, and often involve recursive decision chains. Observability, therefore, must capture not only the outputs but also the reasoning pathways, dependencies, and contextual states that drive agent behavior.

In essence, Agentic Observability = Monitoring + Explainability + Feedback Loops, purpose-built for the new generation of autonomous AI agents.

Why It’s Essential Now

The enterprise adoption of agentic AI — from autonomous assistants to operational bots — is accelerating. However, these systems bring new risks: opaque reasoning, unpredictable behaviors, and hidden dependencies between tools and data. Without robust observability, enterprises face uncertainty, compliance gaps, and operational blind spots.

Agentic Observability is essential because:

- Agents are complex ecosystems. They perform multi-step reasoning, call APIs, access memories, and collaborate with other agents — introducing layers of logic that need end-to-end traceability.

- Trust and accountability are business imperatives. Regulators and stakeholders demand transparency into AI decisions. Observability provides the audit trails and governance needed to meet those expectations.

- Continuous adaptation requires feedback. Agents evolve through prompt updates, policy refinements, and retraining. Observability ensures that real-world insights drive responsible iteration.

- Operational failures carry real-world impact. When an agent takes incorrect actions, it can disrupt workflows, miscommunicate with customers, or violate policy. Rapid root-cause analysis depends on deep observability.

In short, Agentic Observability transforms agent operations from black-box automation into transparent, auditable systems enterprises can trust.

The Foundations of an Agentic Observability Framework

A mature observability system for agents spans the entire lifecycle — design, deployment, monitoring, and iteration. Below are its foundational pillars.

1. Instrumented Design - Embed traceability from the start. Capture reasoning steps, tool invocations, context changes, and cost metrics (latency, token usage, API calls).

2. Evaluation & Testing - Run pre-deployment tests on synthetic and real scenarios to surface logic or policy gaps before going live.

3. Trust & Safety Scoring - Continuously assess actions against safety, factual accuracy, and compliance criteria.

4. Live Monitoring - Track runtime performance, drift, latency, and user satisfaction, and detect anomalies in real time.

5. Hierarchical Tracing - Enable multi-layer visibility across agent chains and sub-agents, making complex interactions transparent.

6. Root-Cause Analysis - Drill down from high-level KPIs to specific reasoning steps that led to an anomaly or error.

7. Feedback Integration - Feed production insights back into design and evaluation to close the improvement loop.

8. Governance & Compliance - Maintain audit logs, guardrails, and documentation for policy adherence and regulatory review.
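To make pillars 1 and 5 concrete, here is a minimal sketch of hierarchical tracing in Python. It is an illustration under stated assumptions, not a reference to any particular tracing library: the `Span` class, its fields, and the agent and tool names (`support_agent`, `orders_api.lookup`, `policy_checker`) are all hypothetical. Each span records one step (a reasoning step, a tool call, or a sub-agent run) along with latency and token usage, and child spans nest under the step that triggered them.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Span:
    """One recorded step: a reasoning step, tool call, or sub-agent run (hypothetical schema)."""
    name: str
    kind: str                       # e.g. "agent", "reasoning", "tool"
    tokens: int = 0                 # cost metric: tokens attributed to this step only
    latency_s: float = 0.0          # wall-clock duration, set by finish()
    started: float = field(default_factory=time.perf_counter)
    children: list = field(default_factory=list)

    def child(self, name, kind):
        """Open a nested span under this one and return it."""
        span = Span(name=name, kind=kind)
        self.children.append(span)
        return span

    def finish(self, tokens=0):
        """Close the span, recording its own token usage and elapsed time."""
        self.tokens = tokens
        self.latency_s = time.perf_counter() - self.started
        return self

    def total_tokens(self):
        """Token usage of this span plus everything nested beneath it."""
        return self.tokens + sum(c.total_tokens() for c in self.children)

    def render(self, depth=0):
        """Return the trace as indented lines, one per span."""
        lines = [f"{'  ' * depth}{self.kind}:{self.name} tokens={self.tokens}"]
        for c in self.children:
            lines.extend(c.render(depth + 1))
        return lines


# Example run: a top-level agent plans, calls a tool, and delegates to a sub-agent.
root = Span("support_agent", "agent")
root.child("plan_refund", "reasoning").finish(tokens=120)
root.child("orders_api.lookup", "tool").finish(tokens=0)
sub = root.child("policy_checker", "agent")
sub.child("check_refund_policy", "reasoning").finish(tokens=80)
sub.finish(tokens=10)
root.finish(tokens=40)

print("\n".join(root.render()))
print("total tokens:", root.total_tokens())
```

Because every span keeps its own cost figures and its children, the same structure supports both a high-level roll-up (`total_tokens()` on the root) and a drill-down to the individual step, which is the access pattern root-cause analysis needs.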

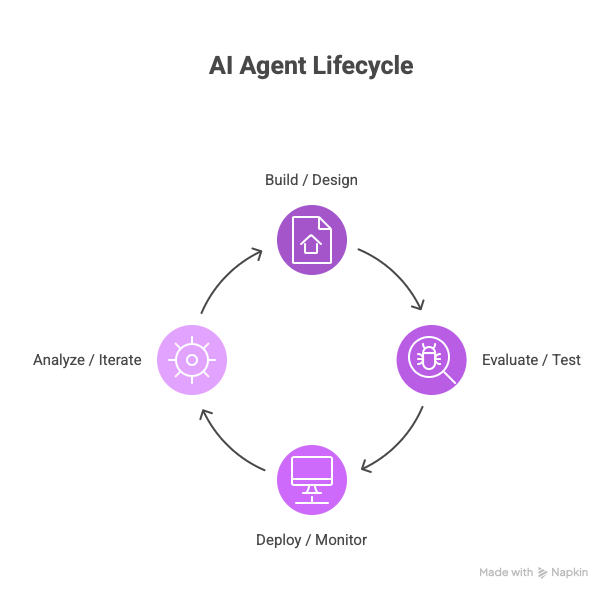

This lifecycle can be visualized across four phases:

- Build / Design – Define metrics, design traceable logic, instrument agents.

- Evaluate / Test – Compare versions, validate safety, and optimize reasoning flow.

- Deploy / Monitor – Observe behavior in production, track anomalies, and visualize performance.

- Analyze / Iterate – Identify root causes, retrain, update prompts or logic, and redeploy improved agents.
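The "compare versions" step in the Evaluate / Test phase can be sketched as a simple regression check. This is a hedged illustration: the function name `compare_versions`, the metric names, the scores, and the `tolerance` threshold are all assumptions made for the example. It assumes both agent versions were scored on the same scenarios, with each score in the range 0 to 1 and higher meaning better.

```python
from statistics import mean


def compare_versions(baseline, candidate, tolerance=0.02):
    """Return metrics where the candidate's mean score drops below the
    baseline's mean by more than `tolerance`.

    Both arguments map a metric name to a list of per-scenario scores
    in [0, 1], where higher is better (an assumption of this sketch).
    """
    regressions = {}
    for metric, base_scores in baseline.items():
        if metric not in candidate:
            continue  # metric not measured for the candidate; skip it
        delta = mean(candidate[metric]) - mean(base_scores)
        if delta < -tolerance:
            regressions[metric] = round(delta, 3)
    return regressions


# Hypothetical evaluation results for two agent versions on four scenarios.
v1 = {
    "task_success": [1.0, 1.0, 0.0, 1.0],
    "policy_adherence": [1.0, 1.0, 1.0, 1.0],
}
v2 = {
    "task_success": [1.0, 1.0, 1.0, 1.0],
    "policy_adherence": [1.0, 0.0, 1.0, 1.0],
}

regressions = compare_versions(v1, v2)
print(regressions)
```

In this made-up data the candidate improves task success but regresses on policy adherence, which is the kind of silent trade-off a per-metric comparison is meant to surface before deployment.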

Key Challenges in Implementing Agentic Observability

Putting Agentic Observability into practice brings several engineering, operational, and organizational challenges. Each must be addressed to build reliable, scalable, and compliant observability frameworks.

- Scale and Volume

AI agents generate a constant stream of data, from reasoning steps and tool invocations to user interactions and telemetry logs. Capturing this high-frequency data across thousands of sessions quickly leads to massive storage and indexing requirements. Efficient data pipelines, compression, and query optimization are essential to make observability practical without overwhelming infrastructure resources.

- Privacy and Security

Observability data often contains sensitive information such as user inputs, internal reasoning chains, or business-critical decisions. Mishandling this data can introduce compliance risks. Implementing data governance controls (including encryption, access policies, anonymization, and retention limits) is crucial to ensure observability does not compromise privacy or security.

- Metric Definition

Unlike traditional ML systems, measuring agent performance isn’t just about accuracy or latency. Many success criteria, such as helpfulness, safety, or adherence to company policy, are qualitative or context-specific. Defining meaningful metrics that balance objectivity with real-world impact remains one of the hardest challenges in agent observability.

- Tool and System Integration

Modern agents interact with a diverse ecosystem of tools: APIs, databases, external knowledge sources, and sub-agents. Each component produces different types of logs and outputs, making unified visibility complex. Building an observability framework that can harmonize data across these tools requires robust standardization, schema alignment, and interoperability.

- Version Drift and Consistency

As agents evolve through prompt updates, retraining, or workflow changes, their behavior, and even the meaning of metrics, can shift. Without versioned observability logic, it becomes difficult to compare performance over time or trace regressions. Maintaining synchronized version control for both agent logic and observability configuration ensures continuity and trust in insights.
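As one illustration of the privacy controls mentioned above, the sketch below redacts a trace event before it is stored. It is a minimal example under assumptions, not a complete data-governance solution: the field names (`user_id`, `user_input`, `reasoning`), the `redact_event` function, and the fixed demo salt are hypothetical, and the email pattern is deliberately simple. Note that salted hashing is pseudonymization rather than full anonymization.

```python
import hashlib
import re

# Deliberately simple email pattern; real deployments need broader PII detection.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

# Hypothetical names of free-text fields that may carry sensitive content.
SENSITIVE_KEYS = {"user_input", "reasoning"}


def redact_event(event, salt="demo-salt"):
    """Return a copy of a trace event that is safer to store.

    - user_id is replaced by a truncated salted SHA-256 digest (pseudonymization)
    - email addresses in sensitive free-text fields are masked
    - all other fields pass through unchanged
    """
    clean = {}
    for key, value in event.items():
        if key == "user_id":
            digest = hashlib.sha256((salt + str(value)).encode()).hexdigest()
            clean[key] = digest[:12]
        elif key in SENSITIVE_KEYS and isinstance(value, str):
            clean[key] = EMAIL_RE.sub("[EMAIL]", value)
        else:
            clean[key] = value
    return clean


# Hypothetical raw event captured from an agent session.
raw = {
    "user_id": "u-42",
    "user_input": "Refund to jane.doe@example.com please",
    "tool": "orders_api",
    "latency_ms": 130,
}
clean = redact_event(raw)
print(clean)
```

Applying redaction at capture time, before events reach storage or dashboards, keeps operational fields such as tool name and latency available for analysis while limiting how much raw user data the observability pipeline retains.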

These challenges underline a critical truth: Agentic Observability is not merely a tooling exercise but a design philosophy. It must be embedded into the very architecture of agent systems — balancing transparency with efficiency, compliance, and continuous adaptability.

The Future of Agentic Observability

Agentic Observability is shifting from passive monitoring to active intelligence and control. Next-generation observability systems are expected to support autonomous root-cause remediation: automatically identifying issues and suggesting, or even implementing, corrective actions in real time. They will incorporate version intelligence to compare agent behaviors across updates, preventing silent regressions and unintended drift. At the same time, AI-driven observability will use large language models to summarize and interpret massive volumes of telemetry data, helping teams understand complex agent behavior without wading through raw logs.

Beyond technical sophistication, the ecosystem will expand toward federated monitoring and industry-wide standardization. Enterprises operating multiple agents or business units will adopt federated frameworks that ensure visibility while maintaining data isolation and compliance. As regulation around AI transparency matures, shared schemas, APIs, and compliance standards will emerge to make observability more interoperable and auditable. Collectively, these advancements will move organizations from reactive firefighting to proactive, data-driven optimization — transforming observability into a continuous engine for agent reliability, safety, and trust.

Conclusion

Agentic Observability is fast becoming a cornerstone of trustworthy AI. As enterprises deploy autonomous agents in mission-critical contexts, transparency, traceability, and control are non-negotiable.

By embedding observability across the full agent lifecycle — from design to iteration — organizations gain not only technical insight but also business confidence. They can ensure that their agents are reliable, accountable, and aligned with human and organizational values.

In an era where agents are not just tools but collaborators, Agentic Observability is the key to keeping intelligence explainable, auditable, and safe at scale.
