The New Architects of AI Systems: Shaping the Era of Agent Engineering

October 29, 2025

As businesses move beyond experimenting with large language models (LLMs) and begin integrating them into mission-critical processes, a new professional practice has emerged: Agent Engineering. The discipline is a natural progression of AI practice. Rather than constructing models, it focuses on designing intelligent systems that can act, reason, and interact effectively in real-world settings. Instead of treating AI as a standalone component, companies are architecting it as an integral layer across operations, customer engagement, analytics, and decision-making.

Agent Engineering isn't merely another arm of data science or application development; it blends system orchestration, observability, reliability engineering, and governance, all grounded in an understanding of business logic and user requirements. Agent Engineers architect and operate AI agents that connect models to APIs, databases, and tools so that outputs are not only contextually accurate but also operationally reliable. Their specialty is balancing control with creativity: facilitating innovation while ensuring stability, compliance, and transparency at scale.

This article outlines how the emergence of Agent Engineering is reshaping enterprise AI processes. It examines the defining qualities of the discipline, why it matters now, and the core practices needed to keep agent-based systems trustworthy and sustainable. Ultimately, Agent Engineers are shaping the future of AI implementation by making certain that intelligent systems are not only powerful but also measurable, controllable, and aligned with company goals.

Defining the Agent Engineer

An Agent Engineer is much more than a backend developer or prompt designer. The role sits at the convergence of several disciplines: system design, machine learning fluency, software engineering discipline, and operational reliability. Agent Engineers serve as interoperability specialists who connect technical sophistication with business value by making intelligent agents not only functional but also reliable, scalable, and aligned with enterprise goals.

They spend their time designing complex, multi-step AI pipelines that combine large language models with APIs, databases, and domain-specific business logic. They create orchestration frameworks that allow these agents to carry out reasoning, retrieval, and interaction across several systems in a repeatable and explainable fashion. By designing workflows for reliability and transparency, Agent Engineers enable companies to move from proof-of-concept prototypes to production-grade systems.

In addition to development, Agent Engineers integrate observability, evaluation, and compliance directly into the agent life cycle. They make sure each piece of an AI system, from prompts to outputs, can be monitored, measured, and governed. This involves putting in place feedback loops, performance dashboards, and automated checks for accuracy, latency, and cost-effectiveness.

Most importantly, Agent Engineers collaborate cross-functionally with product managers, security teams, and domain experts to translate strategic business goals into measurable AI outcomes. In contrast to conventional machine learning engineers, who are mostly concerned with training and tuning models, Agent Engineers are responsible for the end-to-end life cycle, from architecture and testing through deployment, monitoring, and ongoing iteration. In doing so, they are shaping a new generation of AI systems that balance innovation and accountability.

Why the Role Is Rising Now

1. Generative AI Is Moving into Core Workflows

AI assistants are no longer side experiments. They’re becoming embedded within CRM platforms, customer-service tools, analytics systems, and knowledge management workflows. Agent Engineers enable this shift from demo to dependable system.

2. Orchestration Complexity Has Grown

Enterprise agents rarely operate in isolation. They retrieve data, invoke APIs, validate rules, and interact with external services. Managing these multi-step interactions demands robust orchestration logic, traceability, and failure handling — all core to Agent Engineering.

3. Trust, Compliance, and Risk Oversight

In sectors like finance, healthcare, and real estate, AI decisions must be transparent and auditable. Agent Engineers implement guardrails, logging, and fallback mechanisms to ensure outputs meet governance and compliance standards.

4. Need for Continuous Evaluation

Since generative models can behave unpredictably, evaluation cannot stop at deployment. Ongoing quality assessment — through automated tests, human feedback, and telemetry — helps maintain consistency, accuracy, and cost control.

5. Aligning Technology with Business Value

Agent Engineers bridge the gap between innovation and execution. They ensure that agents produce measurable value — improving productivity, decision-making, and user satisfaction rather than just showcasing novelty.

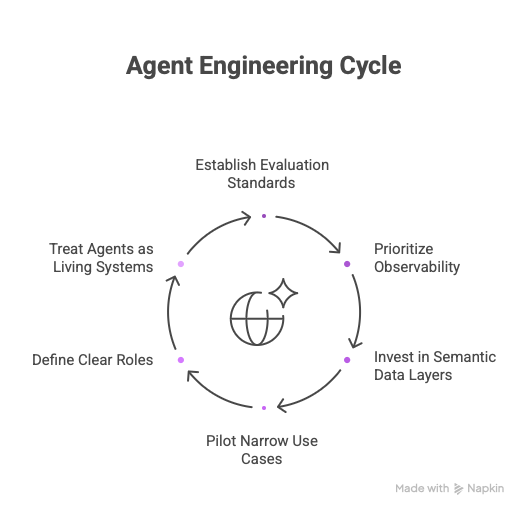

Building an Effective Agent Engineering Function

1. Start with Evaluation and Guardrails

Building an effective agent begins with well-defined evaluation standards. Establish measurable metrics such as accuracy, latency, user satisfaction, and operational cost before scaling any use case. These benchmarks ensure that performance remains transparent and traceable.

Beyond metrics, incorporate governance and compliance checks from the start. Bias detection, hallucination tests, and policy validations should be integral to your testing pipeline — not added later. Reliable AI agents are those whose behavior can be measured, audited, and refined systematically rather than adjusted through guesswork.
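To make the idea of measurable release criteria concrete, here is a minimal sketch of an evaluation gate. The threshold values, the `EvalCase` structure, and the agent interface (a callable returning an answer, a latency, and a cost) are all assumptions made for illustration, not part of any particular framework.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical release thresholds; real values depend on the use case.
THRESHOLDS: Dict[str, float] = {
    "min_accuracy": 0.90,
    "max_latency_s": 2.0,
    "max_cost_usd": 0.01,
}


@dataclass
class EvalCase:
    question: str
    expected: str


@dataclass
class EvalResult:
    accuracy: float
    mean_latency_s: float
    mean_cost_usd: float


# Assumed agent interface: question -> (answer, latency in seconds, cost in USD).
Agent = Callable[[str], Tuple[str, float, float]]


def evaluate(agent: Agent, cases: List[EvalCase]) -> EvalResult:
    """Run every case through the agent and aggregate the metrics."""
    if not cases:
        raise ValueError("need at least one evaluation case")
    correct = 0
    total_latency = 0.0
    total_cost = 0.0
    for case in cases:
        answer, latency_s, cost_usd = agent(case.question)
        # Exact match after normalization; real suites often need fuzzier scoring.
        if answer.strip().lower() == case.expected.strip().lower():
            correct += 1
        total_latency += latency_s
        total_cost += cost_usd
    n = len(cases)
    return EvalResult(correct / n, total_latency / n, total_cost / n)


def gate_failures(result: EvalResult,
                  thresholds: Dict[str, float] = THRESHOLDS) -> List[str]:
    """Return the names of the metrics that miss their threshold."""
    failures = []
    if result.accuracy < thresholds["min_accuracy"]:
        failures.append("accuracy")
    if result.mean_latency_s > thresholds["max_latency_s"]:
        failures.append("latency")
    if result.mean_cost_usd > thresholds["max_cost_usd"]:
        failures.append("cost")
    return failures
```

A release pipeline could refuse to ship whenever `gate_failures` returns a non-empty list. Bias, hallucination, and policy checks would plug in as additional metrics in the same way.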

2. Prioritize Observability

Observability is the foundation of trust in production AI systems. Every inference, API call, or retrieval step should generate logs and traces that help diagnose issues before they escalate. Track performance metrics such as token usage, cost per request, error rates, and response patterns over time. This continuous feedback loop turns debugging into proactive improvement, allowing teams to detect drift, inefficiencies, or anomalies early in the lifecycle.
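One way to get a log record for every step is a small tracing decorator, sketched below with only the Python standard library. The record fields, the `PRICE_PER_1K_TOKENS_USD` value, and the convention that a step returns a dict with a `tokens` count are illustrative assumptions; a production system would more likely emit to a dedicated tracing backend.

```python
import functools
import json
import logging
import time
import uuid

logger = logging.getLogger("agent.trace")

# Hypothetical flat price used only to illustrate cost tracking.
PRICE_PER_1K_TOKENS_USD = 0.002


def traced(step_name):
    """Log one structured record (status, latency, tokens, cost) per call."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            record = {"span_id": uuid.uuid4().hex, "step": step_name}
            start = time.perf_counter()
            try:
                result = fn(*args, **kwargs)
                record["status"] = "ok"
                # Assumption: steps return a dict that may include "tokens".
                if isinstance(result, dict) and isinstance(result.get("tokens"), int):
                    tokens = result["tokens"]
                    record["tokens"] = tokens
                    record["cost_usd"] = round(
                        tokens / 1000 * PRICE_PER_1K_TOKENS_USD, 6
                    )
                return result
            except Exception as exc:
                record["status"] = "error"
                record["error"] = repr(exc)
                raise
            finally:
                elapsed = time.perf_counter() - start
                record["latency_ms"] = round(elapsed * 1000, 2)
                logger.info(json.dumps(record))
        return wrapper
    return decorator


@traced("generate_answer")
def generate_answer(question):
    # Stand-in for a real model call.
    return {"text": f"Echo: {question}", "tokens": 120}


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    generate_answer("What is our refund policy?")
```

Because every record carries the step name, status, latency, and cost, the same logs can feed error-rate dashboards and cost-per-request reports without extra instrumentation.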

3. Invest in Semantic Data Layers

Instead of exposing agents directly to raw enterprise databases, create semantic layers that abstract and organize business logic. These curated layers enforce data consistency and reduce risks of misinterpretation. By structuring access through a controlled semantic model, Agent Engineers can ensure that the AI retrieves information accurately and in a form aligned with organizational policies and vocabulary. This improves precision, reduces hallucination, and simplifies system maintenance.
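As a rough illustration, a semantic layer can be as simple as a catalog of named business metrics, each backed by a vetted, parameterized query. In the sketch below the metric names, table schema, and demo data are hypothetical, and an in-memory SQLite database stands in for the enterprise data store.

```python
import sqlite3

# Hypothetical semantic model: business terms mapped to vetted, parameterized SQL.
SEMANTIC_MODEL = {
    "active_customers": {
        "description": "Number of customers with status 'active' in a region",
        "sql": "SELECT COUNT(*) FROM customers "
               "WHERE status = 'active' AND region = :region",
        "params": {"region"},
    },
    "total_revenue": {
        "description": "Sum of order amounts in a region",
        "sql": "SELECT COALESCE(SUM(amount), 0) FROM orders "
               "WHERE region = :region",
        "params": {"region"},
    },
}


class SemanticLayer:
    """The only data interface the agent sees: named metrics, never raw SQL."""

    def __init__(self, conn):
        self.conn = conn

    def describe(self):
        # Text an agent could be given to learn which metrics exist.
        return {name: spec["description"] for name, spec in SEMANTIC_MODEL.items()}

    def query(self, metric, **params):
        if metric not in SEMANTIC_MODEL:
            raise KeyError(f"Unknown metric: {metric}")
        spec = SEMANTIC_MODEL[metric]
        if set(params) != spec["params"]:
            raise ValueError(f"{metric} expects parameters {sorted(spec['params'])}")
        # Named placeholders keep agent-supplied values out of the SQL text.
        return self.conn.execute(spec["sql"], params).fetchone()[0]


def build_demo_db():
    """Create a small in-memory database with made-up rows for the example."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER, status TEXT, region TEXT)")
    conn.execute("CREATE TABLE orders (id INTEGER, region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)",
        [(1, "active", "EU"), (2, "active", "EU"),
         (3, "churned", "EU"), (4, "active", "US")],
    )
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(1, "EU", 100.0), (2, "EU", 50.0), (3, "US", 70.0)],
    )
    return conn


if __name__ == "__main__":
    layer = SemanticLayer(build_demo_db())
    print(layer.describe())
    print(layer.query("active_customers", region="EU"))
```

The design choice here is that the agent can only ask for metrics the catalog defines, so a misread column name or an unsafe ad hoc query is rejected before it reaches the database.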

4. Pilot Narrow Use Cases with Production Discipline

The best agent engineering initiatives start small but strong. Select one well-defined, high-impact workflow — such as customer inquiry routing or data summarization — and automate it with production-grade rigor. Include version control, CI/CD pipelines for prompts, structured validation, rollback procedures, and monitoring dashboards. Treating each pilot like a full-scale deployment ensures that early learnings translate directly into scalable, maintainable systems later.
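The version control, structured validation, and rollback steps mentioned above might look like the following sketch. `PromptRegistry` and the two check functions are hypothetical names; in a real pipeline the checks would typically include a regression suite executed in CI before promotion.

```python
from typing import Callable, List


class PromptRegistry:
    """Keeps every prompt version and tracks which one is live."""

    def __init__(self) -> None:
        self._versions: List[str] = []
        self._promoted: List[int] = []  # stack of promoted version numbers

    def register(self, template: str) -> int:
        """Store a new version and return its version number."""
        self._versions.append(template)
        return len(self._versions) - 1

    def promote(self, version: int, checks: List[Callable[[str], bool]]) -> bool:
        """Make a version live only if every validation check passes."""
        template = self._versions[version]
        if not all(check(template) for check in checks):
            return False
        self._promoted.append(version)
        return True

    def rollback(self) -> None:
        """Revert to the previously promoted version."""
        if len(self._promoted) < 2:
            raise RuntimeError("no earlier promoted version to roll back to")
        self._promoted.pop()

    @property
    def active(self) -> str:
        if not self._promoted:
            raise RuntimeError("no version has been promoted yet")
        return self._versions[self._promoted[-1]]


# Hypothetical structural checks for a customer-inquiry routing prompt.
def has_question_placeholder(template: str) -> bool:
    return "{question}" in template


def within_length_budget(template: str) -> bool:
    return len(template) <= 500


CHECKS = [has_question_placeholder, within_length_budget]

if __name__ == "__main__":
    registry = PromptRegistry()
    v0 = registry.register("Route this customer inquiry: {question}")
    print(registry.promote(v0, CHECKS), registry.active)
```

Keeping old versions around is what makes rollback cheap: reverting is a pointer change rather than a redeploy.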

5. Define Clear Roles and Interfaces

Agent Engineering thrives when responsibilities are well-defined. Agent Engineers should work closely with AI platform engineers, compliance teams, and domain experts to ensure technical accuracy and regulatory alignment. Establish clear ownership boundaries — who designs prompts, who manages observability, who approves updates — to prevent confusion and duplication. Cross-functional collaboration built on clarity accelerates iteration and fosters accountability.

6. Treat Agents as Living Systems

AI agents are never “done.” As business logic, data structures, and model APIs evolve, agents must adapt in sync. Continuous maintenance, retraining, and versioning are vital for long-term reliability. Document every update, monitor for behavioral drift, and refresh evaluation benchmarks periodically. By treating agents as living systems rather than static products, organizations can ensure resilience, adaptability, and sustained performance in dynamic environments.

Challenges Along the Way

- Rapidly shifting technologies. The agent engineering landscape moves at breakneck speed: new model releases, updated APIs, evolving SDKs, and shifting provider SLAs can appear months or even weeks after a project launches. That rapid churn complicates long-term design decisions — what’s stable today may be deprecated tomorrow — and increases the chance of integration breakages or subtle behavioral changes when a provider updates a model. Mitigation requires designing with abstraction layers (so model or provider swaps are localized), maintaining automated compatibility tests, and dedicating small, frequent review cycles to assess upstream changes rather than reacting to them only after failures occur.

- Hidden costs. The economics of agent-driven systems are often non-intuitive. Token usage, repeated retrievals, multi-step orchestration, and extensive logging can rapidly inflate cloud and inference bills. Aggregated over many users or high-frequency workflows, small inefficiencies multiply into significant budget overruns. To control this, instrument cost telemetry at the earliest stages: track cost per API call, cost per user session, and cost per feature. Use quotas, caching, response truncation, and lower-cost fallbacks for non-critical queries. Regularly review billing with engineering and finance teams and include cost impact as a first-class metric in your KPIs.

- Trust management. Even a single misleading reply, hallucination, or policy breach can damage user trust and undo months of adoption work. Trust is fragile because agents operate in human-facing contexts where mistakes are visible and consequential. Building trust requires layered defenses: pre-response validation (business rules and whitelist/blacklist checks), confidence scoring and transparent caveats presented to users, clear fallback flows that hand control back to humans, and rapid incident response playbooks when errors are detected. In regulated domains, include human-in-the-loop checkpoints until the system has demonstrated sustained reliability.

- Maintenance load. Unlike a static software feature, agent behavior drifts as models, prompts, and data sources change. Prompts that worked well last quarter may degrade after a model update; an evolving database schema can alter retrieval accuracy. This creates an ongoing maintenance burden: prompt versioning, regression tests, evaluation re-runs, and business-rule updates must all be coordinated. Tame this by treating prompts and agent recipes as versioned artifacts in CI/CD, automating regression suites that include representative user queries, and assigning clear ownership for ongoing upkeep tied to product SLAs.

- Cultural alignment. Introducing agentic systems also introduces a change in how teams work. Stakeholders must shift from treating AI as a novelty to treating agents as collaborative teammates that require expectation management, shared workflows, and new governance norms. Resistance or misunderstanding can slow adoption, create mismatched requirements, or produce unsafe usage patterns. Address this with education (workshops, playbooks, demos), documented best practices for interacting with agents, pilot programs that pair agents with human supervisors, and feedback channels so teams can report issues and shape agent behavior.
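The layered defenses described under trust management (pre-response validation, confidence scoring with caveats, and fallback to a human) can be sketched as a single review function. The blocked terms, confidence thresholds, and message texts below are placeholders, and how a confidence score is obtained is left as an assumption.

```python
from dataclasses import dataclass

# Hypothetical policy values chosen only for illustration.
BLOCKED_TERMS = ("guaranteed returns", "medical diagnosis")
ESCALATE_BELOW = 0.60
CAVEAT_BELOW = 0.85
HANDOFF_MESSAGE = "I'm connecting you with a colleague who can help with this."
CAVEAT_TEXT = " (This answer may be incomplete; please verify before acting on it.)"


@dataclass
class Decision:
    action: str  # "send", "send_with_caveat", or "escalate"
    text: str    # what the user actually sees
    reason: str  # kept for audit logs


def review_response(draft: str, confidence: float) -> Decision:
    """Apply the layers in order; the first one that triggers wins."""
    lowered = draft.lower()

    # Layer 1: business-rule check against a blocklist.
    for term in BLOCKED_TERMS:
        if term in lowered:
            return Decision("escalate", HANDOFF_MESSAGE, f"blocked term: {term}")

    # Layer 2: low confidence hands control back to a human.
    if confidence < ESCALATE_BELOW:
        return Decision("escalate", HANDOFF_MESSAGE, "confidence below floor")

    # Layer 3: medium confidence is sent with a transparent caveat.
    if confidence < CAVEAT_BELOW:
        return Decision("send_with_caveat", draft + CAVEAT_TEXT, "medium confidence")

    return Decision("send", draft, "passed all checks")


if __name__ == "__main__":
    print(review_response("Your order ships on Tuesday.", 0.92))
    print(review_response("This fund offers guaranteed returns.", 0.99))
```

Recording the `reason` alongside each decision gives incident responders an audit trail for why a reply was sent, caveated, or escalated.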

Conclusion

Agent Engineering represents the next evolution of AI practice — one that fuses creative reasoning with production-grade rigor. As AI systems become the connective tissue of modern organizations, the Agent Engineer ensures they remain trustworthy, observable, and aligned with business goals.

In this new era, success will hinge not only on building smarter models but on engineering intelligent systems that can be trusted, scaled, and sustained. The Agent Engineer is the cornerstone of that future — the architect ensuring that intelligence meets accountability.
