Future-Proofing AI: Scalable Governance Strategies for Ethical and Compliant AI

9 minutes

July 29, 2025

Artificial intelligence (AI) has progressed from experimental pilots to business-critical systems affecting everything from healthcare and finance to talent acquisition, marketing, and public safety. Generative AI systems increasingly shape digital narratives, and machine learning algorithms drive high-impact decisions, too often without transparency or accountability. As AI becomes more deeply embedded in society, its pitfalls become more visible: invasions of privacy, systemic bias, disinformation, and uncontrolled automation in sensitive situations. For regulated industries like finance, insurance, and healthcare, these gaps in AI oversight present operational, reputational, and legal risks that can no longer be ignored.

This expanding influence brings increased pressure on organizations, from regulators, civil society, and the general public, to manage AI systems responsibly. Governments around the globe are enacting AI rules such as the EU AI Act, standards bodies have published frameworks such as ISO/IEC 42001, and internal stakeholders are calling for strong safeguards. The task is not merely compliance but establishing AI ethics and governance initiatives that are proactive, scalable, and aligned with public values.

This blog discusses the changing landscape of AI governance, covering global policy developments, core governance frameworks, and practical steps for implementing Responsible AI throughout the AI lifecycle.

What is AI governance? Why AI Governance Matters—Now More Than Ever

What is AI governance? At its core, it's the system of policies, structures, and practices that ensures AI is developed and used in a trustworthy, ethical, and lawful manner. With AI systems now embedded across high-stakes business functions, governance has shifted from a niche, optional initiative to a central pillar of organizational strategy. As AI systems become more powerful and pervasive, managing their ethical, legal, and operational implications is no longer a future challenge; it's a present-day necessity. According to the 2025 IAPP / Credo AI Governance Report, 77% of organizations already using AI are actively developing governance programs to address emerging risks. More telling still, 30% of organizations not yet deploying AI are proactively building governance frameworks in anticipation of future adoption. This forward-looking investment signals a growing recognition that responsible AI is not just about managing technology; it's about safeguarding trust, reputation, and long-term viability.

Almost half of all organizations now rank AI governance among their top five strategic priorities, alongside digital transformation, cybersecurity, and data privacy. This marks a shift in executive thinking: AI is no longer seen merely as a lever for innovation, but as a potential source of systemic risk that must be managed as rigorously as financial or operational risk.

But intention has not yet translated into preparedness. The same report finds that only 7–8% of organizations have embedded governance practices into every phase of their AI development cycle, from data sourcing and model design through deployment and monitoring. More alarmingly, just 4% of companies are confident they can deploy AI at scale safely and responsibly. This gap between intent and action is the greatest risk: without mature governance infrastructure, organizations expose themselves to model failures, regulatory violations, reputational harm, and ethical breaches.

Bridging this gap requires more than policies; it calls for organizational change, cross-functional collaboration, and systems that embed governance into every stage of the AI lifecycle. As we'll explore in the sections ahead, the companies that act now to strengthen their governance capabilities will be best positioned to innovate with confidence and integrity in an increasingly AI-driven world.

Global Policy & Regulatory Landscape—What’s New?

The past year has marked a pivotal shift in how governments and global institutions approach AI oversight. As AI systems become embedded in sensitive domains such as healthcare, security, and labor markets, policymakers are moving quickly to craft governance frameworks that not only mitigate harm but also foster safe innovation.

The EU AI Act: A Global Regulatory Blueprint

The EU AI Act entered into force in August 2024, and its first obligations, including the ban on prohibited practices, began applying in February 2025, with further requirements phasing in over the following years. It is the first comprehensive legal framework regulating AI across use cases and sectors. It classifies AI systems into four risk tiers: unacceptable risk (e.g., social scoring, banned outright), high risk (e.g., certain uses in healthcare, education, and law enforcement), limited risk (subject mainly to transparency obligations), and minimal risk. High-risk systems must meet strict requirements such as transparency, human oversight, and risk and impact assessments. The law is enforced through a mix of national authorities and third-party conformity assessments. Because of its extraterritorial reach, the Act is already shaping compliance strategies beyond Europe, especially for multinationals.
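To make the tiering concrete, here is a minimal Python sketch of how an organization might run a first-pass triage of its own systems into the four tiers. The tag names and the `USE_CASE_TIERS` mapping are hypothetical and far from exhaustive; real classification depends on the Act's legal text and review by counsel. Unknown uses default to high risk so they get reviewed rather than waved through.

```python
from enum import Enum


class RiskTier(Enum):
    """The four EU AI Act risk tiers (simplified labels)."""
    UNACCEPTABLE = 4
    HIGH = 3
    LIMITED = 2
    MINIMAL = 1


# Hypothetical, non-exhaustive mapping from internal use-case tags to tiers.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "medical_triage": RiskTier.HIGH,
    "exam_grading": RiskTier.HIGH,
    "law_enforcement_analytics": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def triage(use_case_tags):
    """Return the most severe tier among a system's use-case tags.

    Unknown tags (and systems with no tags) default to HIGH so that
    unclassified uses are escalated for review instead of passing silently.
    """
    tiers = [USE_CASE_TIERS.get(tag, RiskTier.HIGH) for tag in use_case_tags]
    if not tiers:
        return RiskTier.HIGH
    return max(tiers, key=lambda tier: tier.value)


if __name__ == "__main__":
    print(triage(["customer_chatbot", "spam_filter"]))     # RiskTier.LIMITED
    print(triage(["customer_chatbot", "medical_triage"]))  # RiskTier.HIGH
```

Taking the most severe tier reflects the idea that a system inherits the obligations of its riskiest use.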

The Framework Convention on AI: Rights-Based Guardrails

Adopted by the Council of Europe in 2024, the Framework Convention on AI is the first international legally binding treaty on AI. Whereas the EU AI Act is organized around risk tiers, the Convention focuses on aligning AI with fundamental democratic values, requiring protection against algorithmic bias, opaque decision-making, and online disinformation. The treaty also promotes cross-border cooperation, reflecting the view that ethical AI development requires transnational and cross-cultural collaboration.

The U.S. Strategy: State Momentum, Federal Stress

Although the United States has no single, comprehensive AI law, 2025 saw California publish a sweeping report warning of "irreversible harms" from unchecked AI, such as biothreats and disinformation. The report recommended independent audits and incident-reporting procedures. By contrast, a federal Executive Order favors innovation-driven policies such as support for open source and deregulation. This patchwork of rules is confusing, particularly for businesses operating across state lines, and has prompted renewed calls for national harmonization.

The Global Push for Alignment

At the 2025 World Artificial Intelligence Conference in Shanghai, China called for a global AI governance body to reduce regulatory fragmentation and encourage responsible open-source innovation, especially for developing economies. The proposal reflects growing momentum toward harmonized frameworks, building on initiatives such as the OECD AI Principles, the G7 Hiroshima Process, and the UN Global Digital Compact. As AI becomes increasingly borderless, coordinated governance is no longer optional; it is essential.

Foundational Governance Models & Frameworks

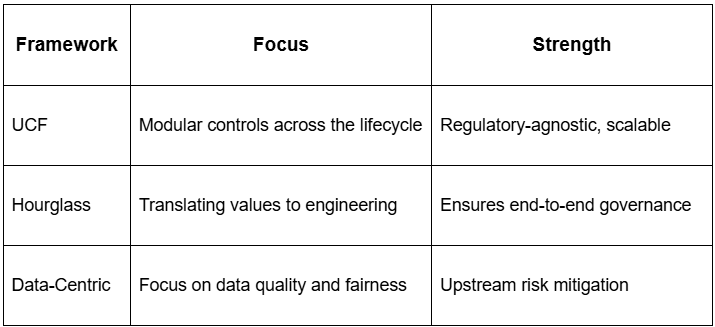

To operationalize responsible AI, organizations need more than principles—they need structured frameworks that embed governance across the AI lifecycle. Three foundational models offer practical, scalable approaches:

Unified Control Framework (UCF)

Co-developed by academia and industry in 2025, the UCF is a regulatory-agnostic governance blueprint. It standardizes risk categories (bias, explainability, safety), maps them to global regulations (EU AI Act, ISO 42001), and includes 42 modular controls covering areas like data quality, model interpretability, and human oversight. UCF helps multinationals streamline compliance across jurisdictions while maintaining ethical AI practices.
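The core idea of a regulation-agnostic control set (implement a control once, then reuse it across regimes) can be sketched as a simple mapping. The control IDs, categories, and mappings below are hypothetical placeholders, not the UCF's actual 42 controls; the sketch only shows how such a mapping lets a team compute coverage gaps per regulation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Control:
    control_id: str          # hypothetical ID, not an official UCF identifier
    risk_category: str       # e.g. "bias", "explainability", "safety"
    regulations: frozenset   # regimes this control helps satisfy


# Illustrative controls only; the real UCF defines its own control catalogue.
CONTROLS = [
    Control("C-DATA-01", "bias", frozenset({"EU AI Act", "ISO/IEC 42001"})),
    Control("C-EXPL-01", "explainability", frozenset({"EU AI Act"})),
    Control("C-OVS-01", "safety", frozenset({"EU AI Act", "ISO/IEC 42001"})),
]


def coverage_gaps(implemented_ids, regulation):
    """Risk categories that `regulation` maps to, but where no mapped
    control has been implemented yet."""
    required = {c.risk_category for c in CONTROLS if regulation in c.regulations}
    covered = {
        c.risk_category
        for c in CONTROLS
        if c.control_id in implemented_ids and regulation in c.regulations
    }
    return sorted(required - covered)
```

For example, with only `C-DATA-01` implemented, `coverage_gaps({"C-DATA-01"}, "ISO/IEC 42001")` returns `['safety']`, while the same implementation checked against `"EU AI Act"` also reports the explainability gap.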

Hourglass Model

The Hourglass Model bridges the gap between high-level ethics and practical execution. It cascades organizational values (e.g., transparency, fairness) through legal, product, and engineering teams into AI development processes. Core practices—like dataset design and deployment protocols—are embedded at the model’s center, then expand into controls like audit logs and documentation. This ensures governance is consistent from vision to implementation.

Data-Centric Governance

This model embeds oversight directly in the data pipeline, addressing risks upstream. It includes dataset audits, labeling standards, provenance tracking, and dynamic testing to monitor data drift and fairness. Especially valuable in regulated sectors, this approach ensures that data quality and integrity form the backbone of ethical AI systems.
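Provenance tracking can start with something as simple as content-hashing each dataset version and storing the hash alongside lineage metadata, so any later change to the data is detectable. The sketch below assumes datasets are files on disk; the record fields (`source`, `parents`, `labeling_guideline`) are illustrative assumptions rather than a standard schema.

```python
import hashlib
import os
import tempfile
from datetime import datetime, timezone


def fingerprint(path, chunk_size=1 << 20):
    """SHA-256 of a dataset file, read in chunks so large files fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def provenance_record(path, source, parents=(), labeling_guideline=None):
    """Build a lineage entry for one dataset version (hypothetical fields)."""
    return {
        "dataset": path,
        "sha256": fingerprint(path),
        "source": source,                        # where the data came from
        "parents": list(parents),                # hashes of upstream datasets
        "labeling_guideline": labeling_guideline,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


def verify(record):
    """True if the file on disk still matches the recorded hash."""
    return fingerprint(record["dataset"]) == record["sha256"]


if __name__ == "__main__":
    # Demo on a throwaway file: record it, then show that an edit is detected.
    with tempfile.NamedTemporaryFile("wb", suffix=".csv", delete=False) as f:
        f.write(b"age,label\n34,1\n")
        path = f.name
    record = provenance_record(path, source="crm_export_v1")
    print(verify(record))   # True
    with open(path, "ab") as f:
        f.write(b"51,0\n")
    print(verify(record))   # False
    os.remove(path)
```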

Together, these frameworks help organizations evolve from check-the-box compliance to robust, lifecycle-wide AI governance.

Core Elements of a Future-Proof AI Governance Program

As AI becomes increasingly integrated into critical systems, governance must evolve from a paper policy into a dynamic, working framework. An effective AI governance program is proactive, cross-functional, and adaptive to shifting technical, regulatory, and societal conditions. It rests on four key pillars:

A. Ethical Principles & Trustworthy AI

Effective governance begins with ethical foundations: fairness, privacy, transparency, accountability, and resilience. These principles need to be built into the technical stack, not merely written into mission statements.

Methods such as differential privacy (limiting what can be inferred about any individual in a dataset), federated learning (training models across decentralized data without pooling it), and explainability tools (SHAP, LIME) put ethics into action. When such techniques are built into AI development, organizations turn principles into executable practice.
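As an illustration of differential privacy, the textbook Laplace mechanism below releases a noisy count. It is a minimal sketch with hypothetical function names, not a production implementation; real deployments typically rely on a vetted DP library and track the cumulative privacy budget across queries.

```python
import random


def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    # expovariate() takes a rate; rate = 1 / scale gives each exponential mean `scale`.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(records, predicate, epsilon, rng=random):
    """Epsilon-differentially private count via the Laplace mechanism.

    A counting query has sensitivity 1 (adding or removing one person changes
    the count by at most 1), so the noise scale is 1 / epsilon. Smaller
    epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

For instance, `dp_count(patients, lambda r: r["age"] > 65, epsilon=0.5)` would return the number of patients over 65 plus noise with scale 2, so any single patient's presence has only a bounded effect on the released value.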

B. Governance Structure and Oversight

Governance isn't only technical; it's structural. An enterprise program is future-proofed through collaboration across legal, risk, IT, product, ethics, and executive teams.

Formal roles, such as AI ethics officers, data stewards, and governance committees, enforce policies, watch for exceptions, and escalate issues. This keeps governance embedded within enterprise decision-making rather than siloed.

C. AI Inventory & Risk Assessment

A centralized AI inventory is the foundation. It tracks every model in use, its purpose, its owner, and its risk level.

High-risk models need impact assessments covering fairness, privacy, and legality, ideally based on international standards such as ISO/IEC 42001 and related standards developed by ISO/IEC JTC 1/SC 42. This visibility enables prioritization, mitigation, and audit readiness.
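A minimal sketch of such an inventory follows. It assumes a simple three-level risk scale and a boolean flag for whether an impact assessment has been completed; both are illustrative choices, not requirements taken from ISO/IEC 42001, and the model names in the usage note are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

# Illustrative ordering: lower rank = reviewed first.
RISK_RANK = {"high": 0, "medium": 1, "low": 2}


@dataclass
class ModelRecord:
    name: str
    purpose: str
    owner: str
    risk_level: str                # one of RISK_RANK's keys
    impact_assessed: bool = False  # has an impact assessment been completed?


def assessment_backlog(inventory: List[ModelRecord]) -> List[str]:
    """Names of high-risk models that still lack an impact assessment."""
    return [m.name for m in inventory
            if m.risk_level == "high" and not m.impact_assessed]


def review_queue(inventory: List[ModelRecord]) -> List[ModelRecord]:
    """Order models by risk tier, with unassessed models first within a tier."""
    return sorted(inventory,
                  key=lambda m: (RISK_RANK[m.risk_level], m.impact_assessed))


def risk_summary(inventory: List[ModelRecord]) -> Dict[str, int]:
    """Count of models at each risk level, for governance reporting."""
    counts: Dict[str, int] = {}
    for m in inventory:
        counts[m.risk_level] = counts.get(m.risk_level, 0) + 1
    return counts
```

With an inventory containing an unassessed high-risk `credit-scoring-v3`, an assessed high-risk `claims-triage`, and an assessed medium-risk `churn-model`, the backlog is `['credit-scoring-v3']` and the review queue puts that model first.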

D. Monitoring & Metrics

AI oversight doesn’t end at deployment. Organizations must define and track key governance KPIs:

- Bias detection (gender, race, geography)

- Model drift (accuracy changes over time)

- Explainability scores

- Incident reporting rates

- Compliance gaps

Yet only 18% of organizations monitor these metrics regularly, a critical gap. Robust monitoring helps detect issues early and drives continuous accountability.
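Two of the KPIs listed above can be computed in a few lines. The sketch below measures bias as the demographic parity difference (the gap in positive-prediction rates between groups) and model drift as the drop in accuracy against a baseline window. The function names and the thresholds `max_gap=0.1` and `max_drop=0.05` are hypothetical placeholders; appropriate values depend on the use case and applicable regulation.

```python
def selection_rate(preds, groups, group):
    """Share of positive predictions (1) within one group."""
    members = [p for p, g in zip(preds, groups) if g == group]
    return sum(members) / len(members) if members else 0.0


def parity_gap(preds, groups):
    """Demographic parity difference: max minus min selection rate across groups."""
    rates = {g: selection_rate(preds, groups, g) for g in set(groups)}
    return max(rates.values()) - min(rates.values()), rates


def accuracy(preds, labels):
    """Fraction of predictions that match the labels."""
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def governance_alerts(preds, labels, groups, baseline_acc,
                      max_gap=0.1, max_drop=0.05):
    """Return human-readable alerts when bias or drift exceeds its threshold."""
    alerts = []
    gap, _ = parity_gap(preds, groups)
    if gap > max_gap:
        alerts.append(f"bias: parity gap {gap:.2f} exceeds {max_gap}")
    drop = baseline_acc - accuracy(preds, labels)   # positive = worse than baseline
    if drop > max_drop:
        alerts.append(f"drift: accuracy fell by {drop:.2f} vs. baseline")
    return alerts
```

Running such a check on each scoring window, and logging its alerts as incidents, ties the bias, drift, and incident-reporting KPIs together.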

Conclusion

The road to Responsible AI is built on robust governance. It’s no longer enough to innovate—organizations must do so responsibly, ethically, and in compliance with evolving global norms. AI governance is the infrastructure that enables trust, ensures safety, and provides the backbone for long-term adoption.

By investing in adaptable AI governance frameworks today—rooted in data integrity, ethical alignment, and cross-functional oversight—enterprises gain more than regulatory clearance. They gain strategic resilience, market credibility, and societal trust. The future of AI is not just about what it can do—it’s about how responsibly we let it do it.


