From Metrics to Minds: Rethinking Observability in the Age of AI Agents

August 7, 2025

Key Takeaway (TL;DR): Traditional machine learning monitoring is no longer sufficient for today's complex AI agents. The new frontier is agentic observability, which focuses on providing AI transparency by making an agent's internal reasoning, tool usage, and decision-making processes understandable. This evolution is the core of Explainable AI (XAI) for agentic systems, ensuring they are reliable, auditable, and aligned with user goals.

The Shift from Traditional ML to Autonomous AI Agents

For years, machine learning observability has been the bedrock of reliable AI, helping teams ensure their systems perform as expected. By tracking inputs, outputs, and key metrics like accuracy and latency, we could diagnose issues and improve performance. But the landscape is undergoing a seismic shift, one documented across recent AI research. We are moving from static models to autonomous AI agents: sophisticated systems capable of reasoning, planning, and interacting with their environment.

These intelligent agents don't just produce predictions; they make decisions, generate intermediate reasoning, and execute multi-step actions. This complexity, a central topic in AI research in 2025, introduces an urgent need for a new paradigm built on AI transparency. Traditional methods fall short because they can't explain the why behind an agent's actions. In this guide, we'll explore the new frontier of agentic observability, explain what Explainable AI means in this context, and show you how to build a foundation for debugging and trusting the next generation of AI.

What Was the Role of Observability in Traditional ML?

In the classic machine learning paradigm, observability was essential for ensuring models were reliable and predictable. These models typically operate as a fixed pipeline in which defined inputs are processed to produce specific outputs. The primary goal of observability here was to monitor this input-output relationship and flag anomalies.

Classical ML observability focused on the model layer, monitoring predictive behavior during development and production. When a model malfunctioned, the root cause was usually a traceable problem like data drift or an infrastructure error.

The main pillars of this approach included:

Performance Monitoring

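This involves tracking quantitative metrics to gauge model performance. Common indicators include:

- Accuracy: The percentage of correct predictions.

- Precision and Recall: Precision is the share of predicted positives that are actually positive; recall is the share of actual positives the model finds. Together they balance false positives against false negatives.

- F1 Score: The harmonic mean of precision and recall, useful for imbalanced datasets where accuracy alone is misleading.

- ROC AUC: The area under the ROC curve, which measures how well a model ranks positive examples above negative ones across all classification thresholds.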
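As a rough illustration, the threshold-based indicators above can all be derived from confusion-matrix counts. The sketch below assumes binary labels where 1 marks the positive class; the function name `classification_metrics` is hypothetical and not taken from any library.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # Harmonic mean of precision and recall; defined as 0 when both are 0.
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

On an imbalanced sample, accuracy can stay high while precision and recall fall, which is why the four numbers are usually read together.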

Data and Concept Drift Detection

Models degrade if the data they rely on changes.

- Data Drift: Occurs when the distribution of input data changes due to shifts in user behavior or external events.

- Concept Drift: Happens when the underlying relationship between inputs and outputs changes, such as shifting market preferences.

Observability tools detect these shifts, enabling teams to intervene before they impact the business.
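One way to quantify data drift on a single numeric feature is the Population Stability Index (PSI), which compares how values fall into buckets in a reference sample versus a current sample. The article does not name a specific drift statistic, so PSI is an illustrative choice here, and the function name, bin count, and smoothing floor are assumptions.

```python
import math

def population_stability_index(reference, current, bins=10):
    """PSI between two non-empty numeric samples, bucketed on the reference range."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all reference values are equal

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values to edge buckets
            counts[idx] += 1
        # A small floor keeps empty buckets from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    ref = bucket_shares(reference)
    cur = bucket_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))
```

A PSI of zero means the bucketed distributions match; larger values indicate a bigger shift. Any alerting threshold is a judgment call that depends on the feature and the business context.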

Latency and Throughput Monitoring

In production, speed is critical. Observability tracks:

- Latency: The time taken to return a prediction.

- Throughput: The number of predictions served per unit of time.

These metrics are crucial for real-time systems like fraud detection engines or recommendation tools.
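Latency is usually reported as percentiles rather than an average, because a few slow requests can hide behind a healthy mean. The sketch below uses the nearest-rank percentile method; the function names and the millisecond unit are assumptions for illustration.

```python
import math

def latency_percentile(latencies_ms, pct):
    """Nearest-rank percentile of a non-empty list of latencies."""
    ordered = sorted(latencies_ms)
    rank = max(1, math.ceil(pct * len(ordered) / 100))  # 1-based rank
    return ordered[rank - 1]

def throughput_per_second(request_count, window_seconds):
    """Predictions served per second over an observation window."""
    return request_count / window_seconds
```

For a sample where nine requests take under 20 ms and one takes 240 ms, the median stays low while the 95th percentile exposes the outlier.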

Error Analysis and Debugging

When predictions failed, logs and Explainable AI methods such as SHAP and LIME provided the visibility needed to debug the issue by attributing each prediction to the input features that drove it.
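To make the idea of feature importance concrete without depending on any particular library, the sketch below uses permutation importance. This is a simpler, different technique from SHAP or LIME: it shuffles one feature column and measures how much accuracy drops. The model is assumed to be any callable that maps a row (a list of feature values) to a label, and all names are hypothetical.

```python
import random

def accuracy(model, rows, labels):
    """Share of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, feature, repeats=5, seed=0):
    """Average accuracy drop after shuffling one feature column."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        # Rebuild each row with the shuffled value in place of the original.
        shuffled = [r[:feature] + [v] + r[feature + 1:] for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / repeats
```

A feature the model ignores shows zero importance, while a feature it relies on shows a positive drop.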

The Evolution: From Predictive Models to Autonomous AI Agents

The evolution from predictive models to autonomous agents represents a fundamental change in how we build and interact with AI. These systems are no longer just passive responders; they are goal-driven problem solvers.

What is an AI Agent?

An AI agent is a software system that can process open-ended commands and execute tasks autonomously, which makes it fundamentally different from older predictive models. The core capabilities of AI agents are:

- Process Vague Goals: They can take a broad instruction, like "summarize this compliance document for risks," infer the user's intent, and break the problem down.

- Deconstruct and Plan: An agent performs multi-step reasoning. To create a market report, for instance, it might decide to search for recent data, process it, and structure the findings.

- Invoke Tools and APIs: Unlike traditional models, AI agents actively use external tools. They might perform a web search, access a database, or run code to fulfill a request.

- Adapt and Learn: Agents operate in stateful loops, learning from their history to refine plans and improve outcomes.

- Communicate and Cooperate: They can interact with other software, documents, or human users to gather context and optimize results.

This evolution from simple prediction to autonomous action dramatically increases AI's power but also its complexity. Failures are no longer just misclassifications but can be flawed strategies, poor tool choices, or incorrect reasoning.
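The capabilities listed above can be pictured as a loop: plan the next step, invoke a tool, record the result, and repeat. The following is a minimal sketch under stated assumptions, not a real agent framework. The planner is a scripted stand-in for an LLM, and `run_agent`, `scripted_planner`, and the two tools are all hypothetical.

```python
def run_agent(goal, planner, tools, max_steps=5):
    """Plan, act, and record each step until the planner signals completion."""
    history = []
    for _ in range(max_steps):
        action = planner(goal, history)  # decide the next step from goal + history
        if action["tool"] == "finish":
            return action["input"], history
        output = tools[action["tool"]](action["input"])  # invoke the chosen tool
        history.append({"tool": action["tool"], "input": action["input"], "output": output})
    return None, history  # step budget exhausted without finishing


# Scripted stand-in for an LLM planner (hypothetical).
def scripted_planner(goal, history):
    if not history:
        return {"tool": "search", "input": goal}
    if len(history) == 1:
        return {"tool": "summarize", "input": history[-1]["output"]}
    return {"tool": "finish", "input": history[-1]["output"]}

# Toy tools that only format strings (hypothetical).
tools = {
    "search": lambda query: f"raw data for {query}",
    "summarize": lambda text: f"summary of {text}",
}

answer, history = run_agent("market report", scripted_planner, tools)
```

Every entry in `history` is a point where the agent could have chosen the wrong tool or passed the wrong input, which is exactly what output-only monitoring cannot see.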

Why Traditional Observability Fails for an Agent in AI

With AI agents, traditional metrics are insufficient. This gap is not just a practical problem but an active area of academic research. What happens when:

- An agent chooses the wrong tool?

- It gets stuck in a repetitive loop?

- It hallucinates context or forgets a previous action?

These are not model bugs; they are behavioral breakdowns. Answering these questions requires a new level of AI transparency that can look inside the agent's decision-making process. This is where agentic observability, a core component of modern Explainable AI (XAI), becomes essential.
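Some of these breakdowns can be caught with simple checks once each step is recorded. As one hedged example, the sketch below flags a possible repetitive loop by counting identical tool calls in a trace. The step format (a dict with `tool` and `input` string keys), the function name, and the default threshold are assumptions for illustration.

```python
from collections import Counter

def find_repeated_calls(steps, threshold=3):
    """Return (tool, input) pairs that occur at least `threshold` times in a trace."""
    counts = Counter((step["tool"], step["input"]) for step in steps)
    return {call: n for call, n in counts.items() if n >= threshold}
```

A non-empty result does not prove the agent is stuck, since some tasks legitimately repeat a call, but it marks the trace for closer review.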

As enterprises adopt these advanced systems, the ability to audit their behavior is a make-or-break requirement. Teams need to know:

- What did the AI agent think the task was?

- Why did it choose this specific plan?

- Where did its reasoning go wrong?

Agentic observability is the key to answering these questions and building truly reliable systems. If you're building systems for regulated industries, ensuring this level of auditability is non-negotiable. Learn more about how we at AryaXAI build trustworthy AI.

What is Agentic Observability?

Agentic observability is the practice of monitoring, understanding, and optimizing the complete lifecycle of autonomous AI agents. It looks beyond final outputs to inspect the internal choices, reasoning sequences, and tool interactions that occur at runtime.

Unlike classical ML observability, which is result-oriented, agentic observability is process-oriented. It’s interested in the how and why of an agent's decisions. It answers critical questions that traditional monitoring cannot:

- How did the agent break down the user's goal?

- Which tools did it select, and were they used effectively?

- Did it recall relevant history when making a decision?

- Where did it deviate from its intended plan?

These insights are foundational for improving agent design and building trust. Agentic observability is not just about making AI transparent; it’s about equipping teams to guide and evolve AI agents as they take on more high-stakes responsibilities, a primary focus of the latest AI alignment research.

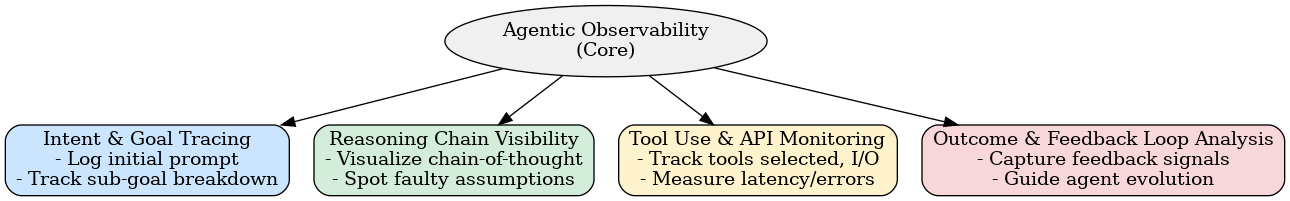

The Four Key Pillars of Agentic Observability

To operationalize observability for AI agents, teams need to focus on four key pillars that together provide comprehensive AI transparency.

- Intent and Goal Tracing: Understand what the agent believed its task was by logging the initial prompt and tracking how it was deconstructed into sub-goals.

- Reasoning Chain Visibility: Visualize the agent's "chain-of-thought" to assess if its logic was coherent and identify where faulty assumptions or hallucinations occurred.

- Tool Use and API Monitoring: Monitor which tools were selected, the inputs and outputs for each call, and any latency or errors during execution.

- Outcome and Feedback Loop Analysis: Capture how user feedback or reward functions influenced the agent's future behavior to ensure it evolves in the intended direction.
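One way to picture the four pillars is as fields on a single trace record captured per agent run. The schema below is a hypothetical sketch, not a standard or a vendor format; every class and field name is an assumption chosen to mirror the pillars.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import List, Optional

@dataclass
class ToolCall:
    tool: str
    tool_input: str
    tool_output: str
    latency_ms: float
    error: Optional[str] = None  # populated when the call fails

@dataclass
class AgentTrace:
    prompt: str                                               # pillar 1: the task as given
    sub_goals: List[str] = field(default_factory=list)        # pillar 1: how it was decomposed
    reasoning: List[str] = field(default_factory=list)        # pillar 2: reasoning steps
    tool_calls: List[ToolCall] = field(default_factory=list)  # pillar 3: tool usage
    feedback: List[str] = field(default_factory=list)         # pillar 4: outcome signals

    def to_json(self):
        """Serialize the whole trace, including nested tool calls."""
        return json.dumps(asdict(self))


trace = AgentTrace(prompt="Summarize this compliance document for risks")
trace.sub_goals.append("extract obligations")
trace.reasoning.append("The document is long, so search for key sections first.")
trace.tool_calls.append(ToolCall("web_search", "compliance risks", "3 results", 120.5))
trace.feedback.append("user rated the summary as helpful")
```

Keeping all four pillars in one record makes it possible to line up a faulty reasoning step with the tool call it triggered.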

Key Challenges in Implementing Explainable AI for Agents

While the need is clear, implementing robust agentic observability presents several challenges rooted in the dynamic nature of autonomous AI agents. Many of these remain open research problems.

- Cross-Component Visibility: Agents operate in a complex ecosystem of foundation models, memory modules, and external tools. Gaining a cohesive view across this stack is technically demanding.

- Non-Determinism of LLMs: The probabilistic nature of the LLMs powering agents means the same input can produce different results. This makes debugging difficult, requiring analysis over multiple runs to separate systematic flaws from random errors.

- Lack of Standardized Metrics: Traditional metrics like accuracy don't capture agent behavior. New benchmarks are needed to measure goal satisfaction, task efficiency, and alignment, and defining them is still an open area of research.

- Scalability of Trace Collection: Logging detailed execution traces is resource-intensive. Scaling this data collection and analysis in real time without creating performance bottlenecks is a major engineering hurdle.

Overcoming these challenges is critical to ensuring the reliability and trustworthiness of agentic systems. Solutions are needed to provide deep visibility without compromising performance.
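The non-determinism challenge suggests a practical habit: run the same task many times and look at how often each failure type appears. The sketch below assumes a hypothetical `run_once` callable that returns an outcome label such as `"ok"` or `"wrong_tool"`. The scripted outcomes and the 0.5 threshold are illustrative assumptions, not measured data.

```python
from collections import Counter

def failure_rates(run_once, task, runs=20):
    """Run the same task repeatedly and return the rate of each non-ok outcome."""
    outcomes = Counter(run_once(task) for _ in range(runs))
    return {label: count / runs for label, count in outcomes.items() if label != "ok"}

def systematic_failures(rates, threshold=0.5):
    """Failure labels frequent enough to suggest a flaw rather than chance."""
    return sorted(label for label, rate in rates.items() if rate >= threshold)


# Scripted outcomes stand in for a real, non-deterministic agent (hypothetical).
scripted = iter(["wrong_tool"] * 12 + ["ok"] * 7 + ["timeout"])
rates = failure_rates(lambda task: next(scripted), "summarize report", runs=20)
flagged = systematic_failures(rates)
```

In this scripted example the wrong tool choice recurs in most runs and gets flagged, while the single timeout does not.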

The Future of Observability and AI Transparency

To support the next generation of AI agents, observability must evolve into an intelligent debugging and alignment system. The future envisioned in current research includes:

- Semantic Monitoring: Going beyond logging strings to understand the meaning behind reasoning steps.

- Visual Debuggers for Agents: Interactive tools that allow developers to step through an agent's reasoning process, much like code debuggers.

- Behavioral Drift Detection: Alerts that trigger when an AI agent begins to take unusual or inconsistent actions.

- Simulation and Replay: Sandbox environments to reproduce past failures and understand their root causes.
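Behavioral drift detection could start from something as simple as comparing which tools an agent uses now against a baseline period. The sketch below measures that gap with total variation distance, one of several possible choices; the function names and the example tool names are assumptions for illustration.

```python
from collections import Counter

def tool_distribution(tool_names):
    """Share of calls going to each tool in a non-empty window of activity."""
    counts = Counter(tool_names)
    total = sum(counts.values())
    return {tool: n / total for tool, n in counts.items()}

def total_variation_distance(p, q):
    """Half the summed absolute difference between two distributions (0 to 1)."""
    tools = set(p) | set(q)
    return 0.5 * sum(abs(p.get(t, 0.0) - q.get(t, 0.0)) for t in tools)
```

A distance near 0 means the agent's tool mix is unchanged, and a distance near 1 means it has switched to almost entirely different tools. The alert threshold in between is a judgment call for each deployment.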

Open standards and community-driven frameworks will be vital in making these advanced tools accessible and interoperable across the AI stack.

Conclusion: From Trusting Metrics to Understanding Minds

As AI agents become central to how we work and innovate, ensuring their behavior is understandable, reliable, and aligned is non-negotiable. The old, model-centric observability stack is insufficient for the new era of autonomy.

Agentic observability, as a practical application of Explainable AI (XAI), offers a new lens, one that treats AI not as a black box to monitor but as an intelligent agent to understand. It brings transparency to AI, accountability to its decisions, and confidence to its deployment. In this new era, observability must not only track performance; it must explain behavior. The paper that finally provides a scalable, widely adopted solution to this challenge may well become one of the most cited AI papers of the next decade.

Ready to bring true AI transparency and observability to your AI agents? Schedule a demo today and see how AryaXAI can help you build trust into your AI systems from day one.
