Comparing Modern AI Agent Frameworks: Autogen, LangChain, OpenAI Agents, CrewAI, and DSPy

September 30, 2025

The ecosystem of AI agents has grown tremendously over the last two years, evolving from single large language model (LLM) calls to sophisticated multi-agent systems that can reason, collaborate, and orchestrate tasks. Several frameworks have enabled this transition, and Autogen, LangChain (with LangGraph), OpenAI Agents, CrewAI, and DSPy are among the most impactful. Each approaches the problem of building intelligent agents differently, reflecting its own design philosophy.

Autogen focuses on multi-agent collaboration, enabling agents to converse with each other and with humans to solve problems collectively. LangChain, one of the most widely adopted frameworks for LLM applications, emphasizes composability: it provides chains, memory, and tool integrations that make it a go-to choice for production-grade systems. For multi-agent development specifically, however, the LangGraph library (developed within the LangChain ecosystem) has become the central component. LangGraph lets developers design agent workflows that are stateful, inspectable, and robust, and it has become one of the most widely used libraries for complex multi-agent orchestration. OpenAI Agents prioritize simplicity and deep integration with the OpenAI ecosystem, which makes them fast to deploy but less transparent and portable. CrewAI takes inspiration from organizational workflows, allowing developers to assign agents distinct roles so that they coordinate like a human team. Finally, DSPy (from Stanford) takes a more research-driven approach, replacing manual prompt engineering with declarative programming and automated prompt optimization.

Together, these frameworks illustrate the diversity of approaches in the agent landscape, ranging from enterprise-friendly platforms with broad interoperability to research-oriented tools that push the boundaries of reliability and optimization. The following sections compare them across six critical dimensions: usage, scalability, drawbacks, flexibility, interoperability, and ecosystem support.

1. Usage

- Autogen: Autogen, created by Microsoft, emphasizes collaboration among multiple agents. It offers abstractions for agent-to-agent dialogue, which makes it particularly suited to applications such as brainstorming, negotiation, and collaborative problem solving. Its usage pattern is modular and conversational, lowering the barrier to experimenting with multi-agent workflows.

- LangChain (and LangGraph): LangChain is perhaps the most popular framework for LLM projects. Its usage centers on chains, tools, and agents, giving developers a toolkit to compose prompts, manage memory, and integrate external APIs. Although LangChain is general-purpose, its companion library LangGraph is now the primary building block for multi-agent pipelines. LangGraph lets developers build stateful, inspectable, and resilient multi-agent interactions, and it is a common choice for advanced orchestration.

- OpenAI Agents: OpenAI’s agent framework integrates directly into the OpenAI API ecosystem. Its primary usage advantage is simplicity: developers can quickly spin up agents that use tools, retrieve knowledge, and complete tasks without heavy configuration. The downside is that it can feel like a black box compared with more customizable alternatives.

- CrewAI: CrewAI focuses on collaborative agent teams, enabling a structured division of labor and intuitive workflows that mimic organizational hierarchies. Developers define agents with roles, goals, and detailed backstories, which simplifies building collaborative, task-oriented teams. A key strength is how easy it is to learn: CrewAI is currently one of the most beginner-friendly frameworks for multi-agent systems. It also offers extensive third-party integrations (40+ tools), making it practical for applied use cases such as research, planning, or report generation.

- DSPy: DSPy, developed at Stanford, is built around programmatic prompt optimization. Its usage emphasizes declarative programming: rather than handcrafting prompts, developers declare each task's inputs and outputs along with a metric for success, and DSPy compiles and optimizes the prompts automatically. This makes it well suited to research, evaluation, and scenarios that require reproducibility.
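To make the "stateful, inspectable" orchestration described for LangGraph (and the role-based division of labor described for CrewAI) more concrete, here is a framework-neutral sketch in plain Python. It does not use either library's API: the node functions (`researcher`, `writer`, `reviewer`), the routing rule, and the state keys are hypothetical stand-ins for LLM-backed agents. Each node reads and updates a shared state dictionary, a router picks the next node (including a conditional loop back), and every step is recorded so the run can be inspected afterwards.

```python
from typing import Callable, Optional

State = dict  # shared workflow state passed from node to node

# Hypothetical role-based "agents"; real ones would call an LLM.
def researcher(state: State) -> State:
    state["notes"] = f"notes on {state['topic']}"
    return state

def writer(state: State) -> State:
    state["revisions"] = state.get("revisions", 0) + 1
    state["draft"] = f"draft v{state['revisions']} using {state['notes']}"
    return state

def reviewer(state: State) -> State:
    # Toy acceptance rule (an assumption): approve once the draft was revised twice.
    state["approved"] = state["revisions"] >= 2
    return state

def route(node: str, state: State) -> Optional[str]:
    """Pick the next node from the current node and state; None means finish."""
    if node == "researcher":
        return "writer"
    if node == "writer":
        return "reviewer"
    if node == "reviewer":
        return None if state["approved"] else "writer"  # conditional loop back
    raise ValueError(f"unknown node {node!r}")

NODES: dict[str, Callable[[State], State]] = {
    "researcher": researcher,
    "writer": writer,
    "reviewer": reviewer,
}

def run(state: State, start: str = "researcher", max_steps: int = 10) -> tuple[State, list]:
    trace = []  # (node name, snapshot of state after that node ran)
    node: Optional[str] = start
    for _ in range(max_steps):  # step cap guards against endless loops
        if node is None:
            break
        state = NODES[node](state)
        trace.append((node, dict(state)))
        node = route(node, state)
    return state, trace

final, trace = run({"topic": "agent frameworks"})
print([step for step, _ in trace])
# ['researcher', 'writer', 'reviewer', 'writer', 'reviewer']
```

Because every step is snapshotted, the trace shows the reviewer sending the draft back once before approving it. That step-by-step visibility into shared state is what makes graph-style orchestration easier to debug than an opaque chain of calls.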

2. Scalability

- Autogen: Scales well for small- to medium-sized multi-agent systems. However, as the number of agents and interactions grows, managing state and cost becomes more complex.

- LangChain: Offers good scalability for both prototypes and production. Many production deployments rely on LangChain, but as workflows grow more complex, chains can become harder to debug and maintain.

- OpenAI Agents: Scales easily in terms of infrastructure, since it is backed by OpenAI’s cloud services. However, it ties scalability directly to the OpenAI ecosystem, which may raise cost and vendor lock-in concerns.

- CrewAI: Strong scalability for team-based orchestration. Its abstractions allow you to design scalable workflows that can simulate large organizational structures. But scaling beyond structured collaboration into more dynamic, real-time systems may require custom engineering.

- DSPy: Its scalability lies in prompt optimization rather than infrastructure. It can systematically optimize prompts for complex tasks across many examples, but it is not a full-stack production framework, so it scales research workflows more readily than enterprise-grade deployments.
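The cost concern raised for Autogen as conversations grow can be made concrete with a back-of-the-envelope calculation. Assume (a simplifying assumption, not a description of any framework's internals) that every turn of a group conversation re-sends the full transcript to the next speaker and that each message is about m tokens. Turn t then re-reads t earlier messages, so T turns consume roughly m × T(T - 1)/2 prompt tokens, which grows quadratically. Capping the history at the last k messages keeps growth roughly linear. The helper name `prompt_tokens` is hypothetical.

```python
from typing import Optional

def prompt_tokens(turns: int, tokens_per_message: int, window: Optional[int] = None) -> int:
    """Total prompt tokens when turn t re-reads the t previous messages.

    window=None assumes the full transcript is re-sent every turn;
    otherwise only the last `window` messages are included.
    """
    total = 0
    for t in range(turns):
        history = t if window is None else min(t, window)
        total += history * tokens_per_message
    return total

for turns in (10, 20, 40):
    full = prompt_tokens(turns, 200)
    capped = prompt_tokens(turns, 200, window=3)
    print(f"{turns} turns: {full:,} tokens (full history) vs {capped:,} tokens (last 3 messages)")
```

At 200 tokens per message, 10, 20, and 40 turns cost 9,000, 38,000, and 156,000 prompt tokens with full history, versus 4,800, 10,800, and 22,800 with a three-message window. Doubling the conversation roughly quadruples the first column but only roughly doubles the second, which is why state management (truncation, summarization) becomes a design concern as agent counts and interactions grow.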

3. Drawbacks

- Autogen: Limited ecosystem compared to LangChain or OpenAI. Still relatively new, with evolving documentation and fewer production case studies.

- LangChain: Flexibility comes at the cost of complexity. Chains can quickly become verbose and difficult to maintain without careful engineering discipline.

- OpenAI Agents: Vendor lock-in is a major concern. The framework prioritizes ease of use but offers limited transparency and portability across different LLM providers.

- CrewAI: Still maturing, and its specialized focus on team-based orchestration means it may be less general-purpose than alternatives.

- DSPy: Best suited for academic and research use cases; less practical as a complete production framework. Steeper learning curve for developers unfamiliar with declarative programming paradigms.

4. Flexibility

- Autogen: Highly flexible in designing multi-agent interactions, but less so in plugging into diverse external ecosystems.

- LangChain: Very flexible, with strong support for custom integrations, modular chains, and varied backends. This flexibility is part of why it has seen broad adoption across industries.

- OpenAI Agents: Limited flexibility—designed to work within OpenAI’s predefined tool and API ecosystem. Best for straightforward tasks but restrictive for custom workflows.

- CrewAI: Flexible in designing role-based collaborative systems, but narrower in scope than LangChain. It is most valuable for agent teamwork rather than single-agent customization.

- DSPy: Flexible for prompt and evaluation optimization, but not designed to handle orchestration, memory management, or tool integrations at scale.
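DSPy's strength in prompt and evaluation optimization rests on declaring what a task takes and returns, then letting an optimizer improve the prompt against a metric. The sketch below illustrates that idea in plain Python. It is loosely modeled on DSPy's notion of a signature (such as "question -> answer") and on bootstrapping few-shot demonstrations, but it is not DSPy's API: `Signature`, `Program`, `bootstrap_demos`, and the lookup-table `toy_model` are hypothetical names, and the toy model deliberately gets one answer wrong so the metric has something to filter out.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Signature:
    """Declarative task spec: named input and output fields, no hand-written prompt."""
    inputs: list
    outputs: list

    @classmethod
    def parse(cls, spec: str) -> "Signature":
        # "question -> answer" becomes inputs=["question"], outputs=["answer"]
        lhs, rhs = spec.split("->")
        def names(side: str) -> list:
            return [name.strip() for name in side.split(",") if name.strip()]
        return cls(names(lhs), names(rhs))

@dataclass
class Program:
    signature: Signature
    demos: list = field(default_factory=list)

    def render_prompt(self, example: dict) -> str:
        """Generate the prompt from the signature plus any selected demonstrations."""
        sig = self.signature
        lines = [f"Given {', '.join(sig.inputs)}, produce {', '.join(sig.outputs)}."]
        for demo in self.demos:
            lines += [f"{name}: {demo[name]}" for name in sig.inputs + sig.outputs]
        lines += [f"{name}: {example[name]}" for name in sig.inputs]
        return "\n".join(lines)

def bootstrap_demos(program: Program, trainset: list, model: Callable[[str], str],
                    metric: Callable[[dict, str], bool], max_demos: int = 2) -> Program:
    """Keep training examples whose model output passes the metric as few-shot demos."""
    demos = []
    for example in trainset:
        prediction = model(program.render_prompt(example))
        if metric(example, prediction):
            demos.append(example)
        if len(demos) >= max_demos:
            break
    return Program(program.signature, demos)

# Hypothetical stand-in for an LLM: answers from a lookup table (wrong on "7*6").
ANSWERS = {"2+2": "4", "7*6": "41", "3+5": "8"}

def toy_model(prompt: str) -> str:
    question = prompt.splitlines()[-1].split(": ", 1)[1]
    return ANSWERS.get(question, "")

def exact_match(example: dict, prediction: str) -> bool:
    return prediction == example["answer"]

trainset = [
    {"question": "2+2", "answer": "4"},
    {"question": "7*6", "answer": "42"},
    {"question": "3+5", "answer": "8"},
]

compiled = bootstrap_demos(Program(Signature.parse("question -> answer")),
                           trainset, toy_model, exact_match)
print(compiled.render_prompt({"question": "9-4"}))
```

The compiled prompt keeps the two demonstrations that passed the metric (2+2 and 3+5) and drops the one the model got wrong. Nothing here handles orchestration, memory, or tools, which mirrors the limitation noted for DSPy: the optimization target is the prompt, not the surrounding system.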

5. Interoperability

- Autogen: Moderate interoperability. Works best in Python ecosystems and integrates with OpenAI models, but lacks extensive third-party support compared to LangChain.

- LangChain: Strong interoperability with multiple LLM providers (OpenAI, Anthropic, Cohere, Hugging Face, etc.), vector databases, APIs, and deployment platforms. It is the most interoperable of the group.

- OpenAI Agents: Limited interoperability—primarily locked into OpenAI’s APIs. While plugins and tools exist, they are tailored for the OpenAI ecosystem.

- CrewAI: Interoperability is growing but still limited. Designed primarily to integrate with Python-based tools and APIs, with less breadth than LangChain.

- DSPy: Interoperability is primarily with LLM backends. It is less about integrating diverse tools and more about improving the effectiveness of model calls.
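Much of the interoperability and vendor lock-in discussion comes down to whether application code depends on one provider's client or on a small interface that several providers can implement. The sketch below shows that adapter pattern with `typing.Protocol`. `ChatModel`, `EchoProvider`, `UppercaseProvider`, and `summarize_ticket` are hypothetical names, and the two providers are offline stubs standing in for real SDK clients; this illustrates the general idea behind multi-provider support, not LangChain's actual classes.

```python
from typing import Protocol

class ChatModel(Protocol):
    """The only interface agent code depends on."""
    def complete(self, prompt: str) -> str: ...

class EchoProvider:
    """Hypothetical stub for provider A; a real adapter would wrap that vendor's SDK."""
    def complete(self, prompt: str) -> str:
        return f"[A] {prompt}"

class UppercaseProvider:
    """Hypothetical stub for provider B with a different response style."""
    def complete(self, prompt: str) -> str:
        return f"[B] {prompt.upper()}"

def summarize_ticket(model: ChatModel, ticket: str) -> str:
    # Agent logic is written once against the interface, not a specific vendor.
    return model.complete(f"Summarize: {ticket}")

for provider in (EchoProvider(), UppercaseProvider()):
    print(summarize_ticket(provider, "login fails after reset"))
# [A] Summarize: login fails after reset
# [B] SUMMARIZE: LOGIN FAILS AFTER RESET
```

Switching providers then means writing one new adapter rather than touching `summarize_ticket`. Frameworks with broad interoperability ship many such adapters for you; with a framework built around a single vendor's API, that abstraction work falls to the developer.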

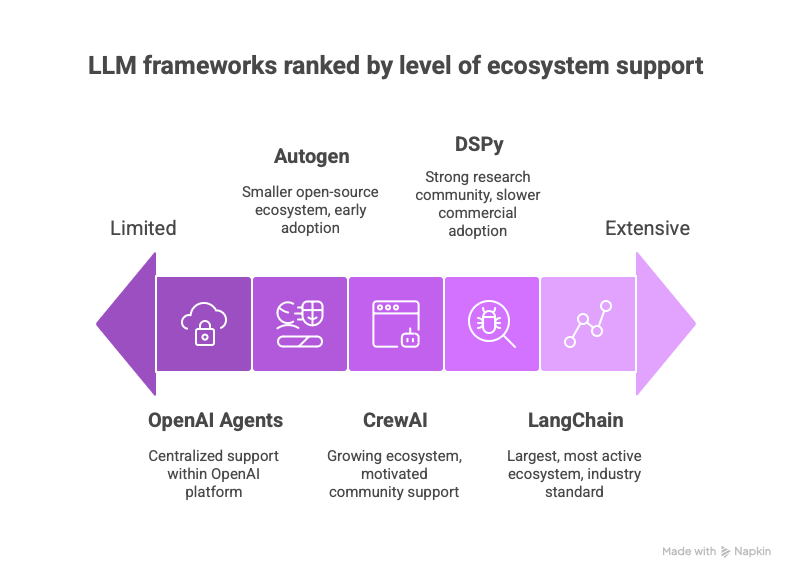

6. Ecosystem Support

- Autogen: Backed by Microsoft Research, which ensures credibility, but the open-source ecosystem is smaller. Community adoption is still in early stages.

- LangChain: The largest and most active ecosystem, with vibrant open-source contributions, extensive documentation, and strong industry adoption. It has become a de facto standard for building LLM applications.

- OpenAI Agents: Strong ecosystem support within OpenAI’s platform, including plugins, APIs, and integrations. However, support is centralized and not community-driven.

- CrewAI: Growing ecosystem with increasing interest among startups and independent developers. Still smaller compared to LangChain but supported by a motivated community.

- DSPy: Strong support within the research community, with contributions from Stanford researchers and other academics. Adoption has been slower in commercial settings, but it is respected for advancing rigorous evaluation and optimization methods.

Conclusion

Choosing the right AI agent framework depends heavily on organizational goals and priorities. Autogen is well suited to exploring multi-agent collaboration in structured workflows, while LangChain remains the most versatile option for production-ready applications thanks to its interoperability and expansive ecosystem. OpenAI Agents provide a streamlined path to quick deployment within OpenAI’s ecosystem, though at the cost of flexibility and potential vendor lock-in. CrewAI is a strong choice for designing team-oriented systems that mirror organizational roles and collaboration, whereas DSPy appeals most to researchers and practitioners focused on optimizing prompts and evaluations rather than managing full end-to-end orchestration.

Looking ahead, no single framework is likely to dominate the landscape; instead, organizations may adopt hybrid strategies that combine the interoperability of LangChain, the research rigor of DSPy, and the simplicity of OpenAI Agents. As these frameworks evolve, interoperability, governance, and reliability will become just as important as raw capabilities in shaping the future of AI agents.

