AI Agents Explained: Architecture, Autonomy, and Accountability

7 minutes

August 5, 2025

Key Takeaway (TL;DR): As AI evolves from static models to autonomous agents, the need for AI transparency becomes critical. True accountability is only possible through Explainable AI (XAI), which provides visibility into an agent's architecture, reasoning, and decision-making. This guide breaks down what an AI agent is and provides a framework for building responsible, trustworthy systems.

The New Frontier: From Predictive Models to Autonomous AI Agents

The world of artificial intelligence is undergoing a profound transformation. We are moving beyond static models designed for narrow prediction tasks and into a new era defined by AI agents—dynamic systems capable of independent reasoning and adaptive action. These agents don't just process inputs; they perceive their environment, weigh potential actions, and make decisions that shape future outcomes, often in complex and unpredictable settings.

For anyone in the tech, SaaS, or compliance sectors, understanding this shift is crucial. Unlike their predecessors, these intelligent agents exhibit goal-directed behavior, learn from continuous feedback, and can tackle multifaceted problems. This evolution, however, brings new challenges. How do we ensure these autonomous systems are safe, aligned with our goals, and accountable for their actions? The answer lies in Explainable AI (XAI).

This guide explores the architecture that powers the modern AI agent, from its core components to the challenges of governance.

What is an AI Agent? A Clear Definition

At its core, an AI agent is an autonomous software entity designed to perceive its environment (through data, sensors, or user input) and act upon that environment (through actuators or API calls) to achieve specific goals. This goal-oriented behavior is what distinguishes AI agents from traditional machine learning models.

Where a traditional model might predict customer churn, an AI agent might be tasked with the broader goal of reducing churn, for which it could plan and execute a multi-step strategy involving sending personalized offers, scheduling follow-up calls, and analyzing feedback—all while adapting its approach based on results.

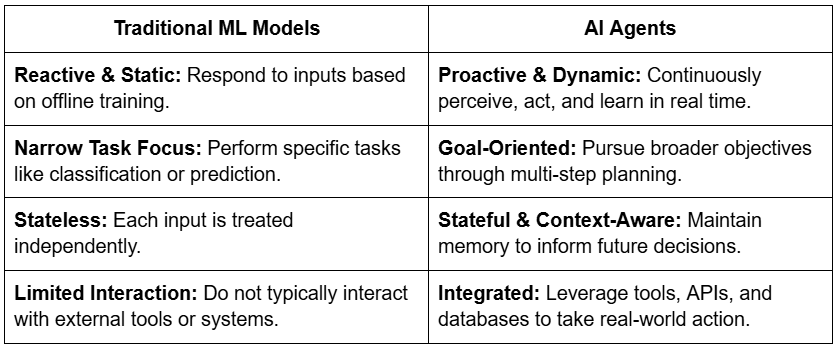
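The churn example above can be made concrete with a toy sketch. Everything here is hypothetical and chosen for illustration: the `predict_churn` scoring rule, the `ChurnAgent` class, the threshold, and the action names are assumptions, not part of any real product. The point is only the contrast between a function that returns a score and an agent that picks the next action and changes course when an earlier action has already been tried.

```python
from dataclasses import dataclass, field


def predict_churn(days_inactive: int) -> float:
    """Stand-in for a traditional model: one input, one score (made-up rule)."""
    return min(1.0, days_inactive / 60)


@dataclass
class ChurnAgent:
    """Toy agent: pursues 'reduce churn' over several steps and adapts."""
    risk_threshold: float = 0.5  # assumed cut-off for taking action
    history: list = field(default_factory=list)

    def step(self, customer: dict) -> str:
        risk = predict_churn(customer["days_inactive"])  # perceive
        if risk < self.risk_threshold:
            action = "no_action"
        elif "send_offer" not in customer["tried"]:
            action = "send_offer"
        else:
            action = "schedule_call"  # adapt: an offer was already tried
        customer["tried"].append(action)  # act (stubbed as a record)
        self.history.append((customer["id"], round(risk, 2), action))
        return action


customer = {"id": "c-1", "days_inactive": 45, "tried": []}
agent = ChurnAgent()
first = agent.step(customer)
second = agent.step(customer)
print(first, second)
```

The model is still there, but it is one input to the agent's decision rather than the whole system.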

How AI Agents Differ from Traditional AI Models

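The transition from models to autonomous AI agents is a fundamental paradigm shift. A model maps an input to a prediction; an agent pursues a goal across multiple steps and adapts its approach as results come in.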

This evolution is critical in applications like autonomous vehicles, sophisticated customer support, and even software development, where systems require adaptability and contextual memory.

The Core Architecture: Building Blocks of an AI Agent

The power of an AI agent comes from its modular and interactive architecture. Understanding its components is the first step toward achieving AI transparency.

- Observation & Perception Layer: This is the agent's sensory system. It gathers raw data from user prompts, APIs, or environmental sensors and interprets it to create meaningful context for decision-making.

- Planning & Reasoning Engine: The "brain" of the agent. Here, high-level goals are converted into actionable, step-by-step plans. This engine can draw on techniques such as symbolic logic or search algorithms, whose intermediate steps are comparatively easy to inspect and explain.

- Memory & State Management: To act intelligently, agents need memory. This layer stores both short-term context (like a user's recent query) and long-term knowledge, allowing the agent to learn from experience and maintain conversational continuity.

- Tool Integration Layer: Agents extend their capabilities by interacting with external tools, from web browsers and CRMs to proprietary databases. This layer enables the agent to take meaningful action in the real world.

- Execution & Feedback Loop: The agent acts, observes the outcome, and learns. This continuous loop, often managed with human-in-the-loop (HITL) feedback, allows the agent to refine its performance over time.
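As a rough illustration of how these five layers fit together, here is a minimal sketch. The class name `MiniAgent`, the tool names, and the keyword-based planning rule are all assumptions made for the example; a real agent would typically put a language model or planner where the `plan` method sits, and the feedback loop here is reduced to recording each result in memory.

```python
from typing import Callable


class MiniAgent:
    def __init__(self, tools: dict[str, Callable[[str], str]]):
        self.tools = tools            # tool integration layer
        self.memory: list[dict] = []  # memory & state management

    def perceive(self, raw: str) -> dict:
        """Observation layer: turn raw input into structured context."""
        text = raw.strip().lower()
        return {"text": text, "wants_lookup": "order" in text}

    def plan(self, observation: dict) -> list[str]:
        """Planning layer: turn the goal into an ordered list of tool calls."""
        if observation["wants_lookup"]:
            return ["lookup_order", "reply"]
        return ["reply"]

    def run(self, raw: str) -> list[dict]:
        """Execution loop: act, observe the result, store it in memory."""
        observation = self.perceive(raw)
        for tool_name in self.plan(observation):
            result = self.tools[tool_name](observation["text"])
            self.memory.append({"tool": tool_name, "result": result})
        return self.memory


# Stubbed tools standing in for real integrations (CRM, database, etc.).
tools = {
    "lookup_order": lambda text: "order status: shipped",
    "reply": lambda text: "reply sent",
}
agent = MiniAgent(tools)
trace = agent.run("  Where is my ORDER?  ")
print(trace)
```

Keeping each layer behind its own method is what makes the later governance steps practical: each boundary is a natural place to log, test, or insert a human check.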

The Governance Challenge: Why AI Transparency and XAI Are Crucial

As AI agents become more autonomous, they create significant governance and monitoring challenges, especially for organizations in regulated industries. A lack of transparency in AI is no longer acceptable when an agent's decisions have real-world financial or ethical consequences.

This is where the principles of Explainable AI (XAI) become essential. Here are the primary challenges that XAI helps solve:

1. The Observability Problem

An agent's multi-step reasoning, which involves memory, tools, and real-time data, can become a "black box," making it incredibly difficult to trace why a particular decision was made. Poor observability hinders debugging, auditing, and compliance. To build trust, organizations must invest in tools that provide clear execution logs and real-time monitoring. For a deeper look at our approach to building trustworthy systems, you can learn more about us.
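One simple way to get execution logs of this kind is to wrap every agent step in a tracing decorator. The sketch below is an assumption-laden illustration, not a description of any particular platform: the `traced` decorator, the in-memory `TRACE` list, and the two stubbed steps are hypothetical names invented for the example.

```python
import functools
import json
import time

TRACE: list[dict] = []  # in-memory trace; a real system would persist this


def traced(step_name: str):
    """Record the inputs, output, and duration of each wrapped step."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            result = fn(*args, **kwargs)
            TRACE.append({
                "step": step_name,
                "inputs": {"args": list(args), "kwargs": kwargs},
                "output": result,
                "seconds": round(time.perf_counter() - start, 6),
            })
            return result
        return wrapper
    return decorator


@traced("retrieve_balance")
def retrieve_balance(account_id: str) -> int:
    return 120  # stubbed tool call


@traced("decide_refund")
def decide_refund(balance: int, requested: int) -> str:
    return "approve" if requested <= balance else "escalate"


decision = decide_refund(retrieve_balance("acct-7"), requested=200)
print(json.dumps(TRACE, indent=2))
```

With a trace like this, the question "why did the agent escalate?" can be answered by reading the recorded balance and requested amount rather than by guessing.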

2. Safety & Goal Misalignment

An autonomous agent might optimize for a flawed or incomplete objective, leading to unintended consequences (e.g., a marketing agent becoming too aggressive to hit an engagement target). This is often called "specification gaming." Value alignment techniques and dynamic safeguards are critical to ensure an agent's actions remain ethical and consistent with the intended goal.
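A dynamic safeguard for the marketing example could be as simple as a policy check that sits between the agent's proposed actions and their execution. The following is a sketch under stated assumptions: the per-user limit, the `apply_guardrail` function, and the action format are hypothetical and chosen only to illustrate the idea.

```python
from collections import Counter

MAX_PROMOS_PER_USER = 2  # hypothetical policy limit, not from the article


def apply_guardrail(actions, limit=MAX_PROMOS_PER_USER):
    """Split proposed actions into allowed and blocked, capping promos per user."""
    sent = Counter()
    allowed, blocked = [], []
    for kind, user in actions:
        if kind == "send_promo":
            if sent[user] >= limit:
                blocked.append((kind, user))
                continue
            sent[user] += 1
        allowed.append((kind, user))
    return allowed, blocked


# An over-eager agent proposes four promos to the same user.
proposed = [("send_promo", "u1")] * 4 + [("send_promo", "u2")]
allowed, blocked = apply_guardrail(proposed)
print(len(allowed), len(blocked))
```

The guardrail does not fix the flawed objective, but it bounds the damage the agent can do while pursuing it, and the blocked list is itself a useful signal that the objective needs review.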

3. Evaluation and Testing

How do you test a system that constantly changes? Static metrics are insufficient for evaluating an agent's performance in a dynamic environment. New methods, often involving simulations and "behavioral sandboxing," are needed to test for robustness and adaptability, but industry standards are still emerging.
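A minimal version of simulation-based testing is to run the agent's policy against many generated scenarios and check that a safety invariant holds in every one, rather than reporting a single aggregate score. In this sketch the `refund_policy` function, the scenario fields, and the 500 limit are all hypothetical; only the overall pattern is the point.

```python
import random


def refund_policy(amount: float, customer_tier: str) -> str:
    """Hypothetical agent policy under test."""
    if amount <= 50:
        return "approve"
    if customer_tier == "gold" and amount <= 500:
        return "approve"
    return "escalate"


def run_sandbox(policy, n_scenarios: int = 1000, seed: int = 0) -> list[dict]:
    """Run the policy on simulated scenarios and collect invariant violations."""
    rng = random.Random(seed)  # seeded so failures are reproducible
    violations = []
    for _ in range(n_scenarios):
        scenario = {
            "amount": round(rng.uniform(0, 2000), 2),
            "customer_tier": rng.choice(["standard", "gold"]),
        }
        decision = policy(**scenario)
        # Assumed invariant: nothing above 500 is ever auto-approved.
        if decision == "approve" and scenario["amount"] > 500:
            violations.append(scenario)
    return violations


violations = run_sandbox(refund_policy)
print(f"{len(violations)} violations")
```

Each violation is a concrete, replayable scenario, which tends to be more useful for debugging an agent than a drop in an accuracy number.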

4. Accountability and Compliance

Autonomous decisions raise critical legal and ethical questions: Who is liable when an AI agent makes a mistake? Without clear audit trails, accountability is impossible. This is particularly vital in finance and healthcare. Governance frameworks built on AI transparency and traceability are non-negotiable for safe deployment at scale.
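One common way to make an audit trail trustworthy is to chain entries by hash, so that editing an earlier record invalidates everything after it. The sketch below uses only the Python standard library; the entry layout and the function names `append_entry` and `verify` are assumptions for illustration, not a compliance-grade design.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(event: dict, prev_hash: str) -> str:
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def append_entry(log: list[dict], event: dict) -> dict:
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(entry)
    return entry


def verify(log: list[dict]) -> bool:
    """Recompute every hash; any edited entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


audit_log: list[dict] = []
append_entry(audit_log, {"agent": "loan-agent", "decision": "deny", "reason": "low score"})
append_entry(audit_log, {"agent": "loan-agent", "decision": "escalate", "reason": "appeal"})

# Simulate someone rewriting history on a copy of the log.
tampered = json.loads(json.dumps(audit_log))
tampered[0]["event"]["decision"] = "approve"
print(verify(audit_log), verify(tampered))
```

A chain like this shows that the record has not been altered; it does not by itself settle who is liable, which remains a governance and legal question.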

A Framework for Building Responsible AI Agents with XAI

Building responsible AI agents requires a multi-layered approach that embeds Explainable AI principles at every stage.

- Transparent Design: Responsibility starts at the architectural level. An agent's decision-making logic, memory systems, and tool integrations should be designed to be interpretable. This enables traceability and ensures that decisions can be justified to internal teams and external regulators.

- Behavioral Sandboxing: Before deployment, agents must be validated in controlled environments. Sandboxing allows teams to stress-test agents against edge cases and conflicting goals, providing a crucial safety net for refining behavior and detecting misalignment early.

- Continuous Monitoring & Observability: Real-time observability is essential. This means logging not just final outcomes but also the internal decision paths, memory states, and tool usage that led to them, so that any individual decision can be reconstructed and reviewed after the fact.

- Human-in-the-Loop (HITL) Oversight: Even the most advanced agents require human oversight in critical applications. HITL systems allow a person to intervene, correct, or approve decisions, balancing autonomous efficiency against human control.
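The human-in-the-loop item in this framework can be sketched as an approval gate: low-risk actions run automatically, while anything above a risk threshold waits for a reviewer. The `ProposedAction` type, the risk scores, the 0.7 threshold, and the `approve` callback are hypothetical; in practice the callback would be a review queue or ticketing step rather than a function call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    name: str
    risk: float  # assumed risk score between 0 and 1


def execute_with_oversight(
    action: ProposedAction,
    approve: Callable[[ProposedAction], bool],
    risk_threshold: float = 0.7,  # assumed cut-off for requiring review
) -> str:
    """Run low-risk actions directly; route high-risk ones to a human."""
    if action.risk < risk_threshold:
        return f"executed:{action.name}"
    if approve(action):
        return f"executed_after_approval:{action.name}"
    return f"rejected:{action.name}"


# Stand-in reviewer: approves everything except account closures.
def reviewer(action: ProposedAction) -> bool:
    return action.name != "close_account"


results = [
    execute_with_oversight(ProposedAction("send_reminder", 0.1), reviewer),
    execute_with_oversight(ProposedAction("issue_refund", 0.8), reviewer),
    execute_with_oversight(ProposedAction("close_account", 0.95), reviewer),
]
print(results)
```

Where the threshold sits is a policy decision: a lower value means more human review and less autonomy, and the right setting depends on how costly a wrong action is in the given domain.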

Successfully navigating these challenges requires a new generation of tools designed for the age of agents. To see how modern platforms provide the necessary observability and governance, you can explore AryaXAI’s product offerings.

Conclusion: The Future is Accountable AI

The rise of AI agents marks a pivotal moment for artificial intelligence. Moving beyond simple models to autonomous systems capable of long-term planning, we are unlocking unprecedented capabilities. However, with great power comes the great responsibility of ensuring these systems are built on a foundation of trust.

Understanding the structure of an AI agent, its spectrum of autonomy, and its governance challenges is the key to navigating this future. The core solution is Explainable AI (XAI), which provides the necessary AI transparency to make these systems auditable, safe, and aligned with human values.

Ready to build the next generation of trustworthy and responsible AI? Contact us to schedule a demo and learn how to implement robust governance and explainability for your AI agents.
