The Unseen KPI for AI Success: Designing for Confidence in AI Results (CAIR)

7 minutes

September 1, 2025


The dynamics of AI adoption are changing. Some AI products are adopted easily, while others struggle to earn users' trust. Technical sophistication alone is not enough for an AI product to succeed in the marketplace. A more powerful, yet understated, metric is at work: CAIR (Confidence in AI Results), a measure of how much users trust the output generated by an AI model. CAIR is not a vanity metric; it is a psychological variable that can be fine-tuned and optimized to drive adoption and long-term product survival. In this blog, we take a closer look at why CAIR is the "underdog" metric that decides the fate of an AI product, and discuss how it can be defined, measured, and improved through purposeful product design to create a more stable and successful AI solution.

How Does CAIR Affect Business Outcomes?

Although CAIR is technically a soft metric, it has a tangible and quantifiable effect on business outcomes. Two factors drive the adoption of an AI tool: fear and confidence. Maximum adoption happens when fear is low and confidence is high. Confidence is the factor to optimize, because low confidence leads to user dissatisfaction and low productivity. This is where CAIR helps: a simple equation that balances the value users get against the psychological barriers they face. A low CAIR means lower adoption, lower retention, and higher churn.

On the other hand, a high CAIR means a better user experience, increased engagement, and better retention. It also lightens the load on customer support, because users rely on support less and are less likely to run into, and report, problems caused by a lack of trust.

How to Measure the CAIR Value?

The CAIR (Confidence in AI Results) value can be estimated with a simple equation that depends on three variables:

- Value: the benefit users get when the AI succeeds.

- Risk: the consequences if the AI makes an error.

- Correction: the effort required to fix the AI's mistakes.

$$\text{CAIR} = \frac{\text{Value of Success}}{\text{Perceived Consequence of Error} \times \text{Effort to Correct}}$$

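The equation captures a key trade-off: Value sits in the numerator, while Risk and Correction effort sit in the denominator. For confidence to be high, the value a user perceives in the AI's output must be large relative to the product of the perceived risk and the effort required to fix an error. Lowering either factor in the denominator raises CAIR just as effectively as increasing value.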
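To make the trade-off concrete, here is a minimal Python sketch of the CAIR equation. It assumes each variable is a hypothetical score on a 1 to 10 scale (for instance, averaged from user surveys); the article does not prescribe a scale, and the scores used here are illustrative, not measurements.

```python
def cair(value: float, risk: float, correction: float) -> float:
    """CAIR = value / (risk * correction).

    value      -- perceived benefit when the AI succeeds
    risk       -- perceived consequence of an AI error
    correction -- perceived effort needed to fix an AI mistake
    All three are assumed to be positive scores on the same hypothetical 1-10 scale.
    """
    if risk <= 0 or correction <= 0:
        raise ValueError("risk and correction must be positive")
    return value / (risk * correction)


# Illustrative scores only, not measurements.
writing_assistant = cair(value=8, risk=2, correction=1)  # low risk, trivial to fix
diagnosis_tool = cair(value=9, risk=6, correction=2)     # high value, but errors are costly

print(f"Writing assistant CAIR: {writing_assistant:.2f}")
print(f"Diagnosis tool CAIR:    {diagnosis_tool:.2f}")
```

Because Risk and Correction multiply, halving the correction effort (for example, with a one-click undo) raises CAIR as much as doubling the perceived value.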

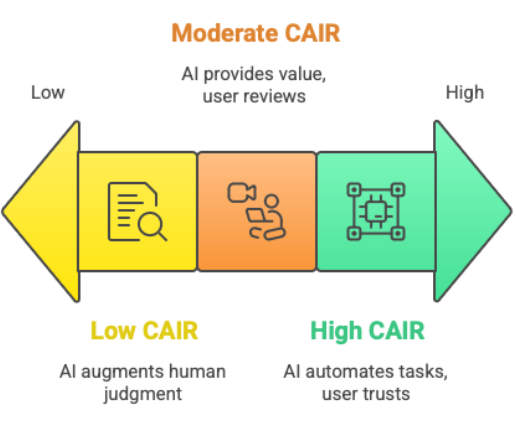

- High CAIR: AI products with a high CAIR make it easy for users to trust the AI. They are designed and developed with the user's expectations and experience at the core.

For example, consider an AI-powered writing assistant. The Value is high: it helps users brainstorm content ideas, draw on numerous sources, research trending topics, and find the right topic for their niche. The Risk is only a poorly written article, and the Correction effort is minimal: you can delete the faulty text or ask the tool to try again.

- Moderate CAIR: AI products with a moderate CAIR offer substantial value but carry a higher risk of error. Users approach their output with some caution, and having a human in the loop can help them feel comfortable.

For example, consider an AI-powered medical diagnosis tool designed to analyze X-rays and MRIs, flag potential anomalies, and find patterns to assist doctors. The Value is high: it speeds up the diagnostic process and gives doctors insights that help them reach a more refined and accurate diagnosis. But the Risk is far from trivial. Incorrect findings or conclusions can delay procedures and lead to an incorrect diagnosis or treatment. Even though the doctor makes the final decision, an incorrect output still introduces meaningful risk.

- Low CAIR: In high-stakes domains such as finance or medicine, the intrinsic danger of a wrong AI output is very large. In such situations, good products do not attempt to replace human judgment; they use AI to enhance it. Good tax software, for example, uses AI to automate data entry and suggest possible deductions, but the final decision and verification remain with the user.
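One way to act on these tiers is to let the CAIR score decide how much human oversight a feature gets, echoing the human-in-the-loop advice for the moderate tier. The sketch below assumes a CAIR score computed as Value / (Risk × Correction) and uses hypothetical thresholds (`HIGH_CAIR`, `MODERATE_CAIR`) that a team would need to calibrate from its own data.

```python
from enum import Enum


class Oversight(Enum):
    AUTOMATE = "AI acts directly; the user can undo or regenerate"
    REVIEW = "AI suggests; a human reviews before it takes effect"
    ASSIST = "AI only assists; a human makes and verifies the decision"


# Hypothetical cut-offs: calibrate them against your own users and domain.
HIGH_CAIR = 2.0
MODERATE_CAIR = 0.5


def oversight_for(cair_score: float) -> Oversight:
    """Map a CAIR score to the level of human oversight a feature should get."""
    if cair_score >= HIGH_CAIR:
        return Oversight.AUTOMATE
    if cair_score >= MODERATE_CAIR:
        return Oversight.REVIEW
    return Oversight.ASSIST


for score in (4.0, 0.75, 0.1):
    level = oversight_for(score)
    print(f"CAIR {score:.2f} -> {level.name}: {level.value}")
```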

5 Principles for Optimizing CAIR Value

Optimizing CAIR is a product design challenge, not just a technical one. It requires a deliberate shift in perspective, moving from a focus on raw AI performance to a deep consideration of the user's psychological experience. The following five principles can guide product teams in building more trustworthy and ultimately more successful AI products:

- Strategic Human-in-the-Loop: Instead of trying to automate the entire pipeline, identify the tasks where human judgment cannot be replaced, so that the process augments human capabilities rather than replacing them. Human interventions should be a natural, integral part of the workflow. The AI tool performs repetitive, precision-based tasks at higher speed, while a human has the last word on key decisions.

- Reversibility: When an AI tool produces wrong or unexpected results and users are stuck with them, their faith in the tool collapses and they become hesitant to use it again, because the perceived risk of using it is now high. The solution is to give users an easy way to undo or reverse an AI action. This "safety net" does more than undo errors: it changes the user's psychological relationship with the product, decreases the perceived risk of failure, and encourages more playful experimentation with the AI's capabilities.

- Consequence Isolation: A single AI error should never be able to cause a system-wide, catastrophic failure. Design so that the effect of an AI error stays isolated and simple to correct. For example, in a content generation tool, if one paragraph is badly written, it should be trivial to redo or remove just that paragraph without discarding the whole document. By compartmentalizing possible effects, you keep the "blast radius" of any error small and thereby increase user confidence in the overall dependability of the product.

- Transparency: Be upfront about the AI's limitations and about how it reached its decision. When a system makes a suggestion, a simple note such as "this is based on your previous purchase history" or "this model is 90% confident" gives important context. Such transparency helps build trust and breaks down the "black-box" image of AI. Providing source data or the decision-making logic helps users understand and trust the AI model and gives them the insight they need to make a decision.

- Control Gradients: A design principle that gives users different levels of control depending on the complexity and risk of the task, since not every task calls for the same level of AI participation. The higher the stakes (risk or complexity) of a task, the more control the user needs. Letting users adjust the level of control to match a task's requirements makes the tool feel more intuitive and reliable, and it boosts confidence because users know they can stay in control when it matters most.
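Reversibility and consequence isolation can be built into the data model itself. The following Python sketch, a hypothetical design rather than any particular product's implementation, stores a document as independent sections, each with its own undo history, so regenerating or undoing one paragraph never touches the others. The `generate` callable is a stand-in for a model call.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Section:
    """One independently editable unit of an AI-generated document."""
    text: str
    history: list[str] = field(default_factory=list)  # earlier versions, newest last

    def replace(self, new_text: str) -> None:
        # Keep the current text so the change can be reversed later.
        self.history.append(self.text)
        self.text = new_text

    def undo(self) -> bool:
        """Restore the previous version; return False if there is nothing to undo."""
        if not self.history:
            return False
        self.text = self.history.pop()
        return True


@dataclass
class Document:
    sections: list[Section]

    def regenerate(self, index: int, generate: Callable[[str], str]) -> None:
        # Only the chosen section changes: the "blast radius" is one paragraph.
        section = self.sections[index]
        section.replace(generate(section.text))

    def render(self) -> str:
        return "\n\n".join(s.text for s in self.sections)


doc = Document([Section("Intro paragraph."), Section("Badly written paragraph.")])
doc.regenerate(1, lambda old: "Rewritten paragraph.")  # stand-in for a model call
print(doc.render())
doc.sections[1].undo()  # one action restores the previous version
print(doc.render())
```

Keeping history per section, rather than one global undo stack, is what lets a user reverse a bad paragraph without also losing later edits elsewhere in the document.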

How to Measure and Improve CAIR Value?

You need to measure something before you can optimize it. CAIR is a psychological variable rather than a directly observable metric, but it can be approximated with a mix of qualitative and quantitative methods, which together give a more comprehensive view of user confidence and of the issues that need to be addressed.

Measuring CAIR

- By using Qualitative Methods:

- Requirement Gathering: Engage directly with your ideal user group. Try to understand their current pain points, how they feel, what works for them, and what puts them off, and ask plenty of open-ended questions about their trust in AI.

- Score-Based Surveys: To get a quantitative view of users' responses, create simple, direct score-based questionnaires, for example: "How confident are you in the result provided by the AI tool?"

- Experience-Based Testing: Have users interact with the AI product or tool and observe them firsthand. Note what confused them and where they paused, backtracked, or felt unsure.

- By using Quantitative Methods:

- Track Behaviour: Observe and monitor metrics that show low user confidence. This includes:

- Edits and Discards: How often users edit, modify, or discard AI-generated content to get the output they expected.

- "Undo" Actions: The number of times a user undoes an AI action, a proxy for how often the model produces wrong or irrelevant output.

- Time on Task: Compare the time spent on a task using the AI versus performing the same task manually. An increase in time with the AI could indicate a lack of trust and extra scrutiny.


How to Track Related KPIs?
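The behavioral signals listed above can be computed from an ordinary event log. This Python sketch assumes a hypothetical log format in which each record says what the user did with one AI output (`accepted`, `edited`, `discarded`, or `undone`) and how long the task took with and without the AI; the survey scores are hypothetical 1 to 5 answers to the confidence question. Rising edit, discard, or undo rates, a time ratio above 1.0, or falling survey scores all point to low CAIR.

```python
from collections import Counter
from statistics import mean

# Hypothetical event log: one record per AI output shown to a user.
events = [
    {"action": "accepted",  "seconds_with_ai": 40,  "seconds_manual": 90},
    {"action": "edited",    "seconds_with_ai": 70,  "seconds_manual": 90},
    {"action": "undone",    "seconds_with_ai": 120, "seconds_manual": 90},
    {"action": "discarded", "seconds_with_ai": 60,  "seconds_manual": 90},
]
# Hypothetical 1-5 answers to "How confident are you in the result provided by the AI tool?"
survey_scores = [4, 5, 3, 4]


def cair_signals(events: list[dict], survey_scores: list[int]) -> dict[str, float]:
    """Summarize behavioral and survey signals that track user confidence."""
    if not events:
        raise ValueError("need at least one event")
    counts = Counter(e["action"] for e in events)
    total = len(events)
    return {
        "edit_rate": counts["edited"] / total,
        "discard_rate": counts["discarded"] / total,
        "undo_rate": counts["undone"] / total,
        # Above 1.0 means tasks take longer with the AI than without it.
        "time_ratio": mean(e["seconds_with_ai"] for e in events)
        / mean(e["seconds_manual"] for e in events),
        "avg_confidence": mean(survey_scores),
    }


for name, value in cair_signals(events, survey_scores).items():
    print(f"{name:>14}: {value:.2f}")
```

Tracking these numbers per feature and per release, rather than once, is what turns them into KPIs: a change that lowers the undo rate or raises the average confidence score is evidence that CAIR improved.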

Enhancing CAIR

Once low-confidence points have been identified, you can apply targeted improvements based on the CAIR optimization principles.

- Introduce Confirmation Steps: Add a simple confirmation step, such as an "Are you sure?" prompt or a preview, before critical AI actions are finalized, giving the user a chance to review and confirm.

- Improve UI Feedback: Use clear, unambiguous design elements to communicate what the AI is doing. A spinner, a progress bar, or even simple text such as "Generating content..." eases anxiety and keeps the user informed.

- Include a "Regenerate" Button: Give users a clear and easy means of obtaining a new result. A "regenerate" or "try again" button puts them in charge and allows them to find alternatives without having to manually restart or undo the process.

- Offer Explainable AI (XAI): Where appropriate, give a quick insight into the AI's reasoning. This can be as simple as "We recommend this based on your previous searches" or "This is a common choice for users with similar tastes." This openness demystifies the AI's output and earns trust.

- Isolate Errors: Design your system so that an error in one AI module does not trigger a cascade of failures. For instance, if an AI-based image filter fails, it should not bring down the whole photo-editing application.
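Two of these improvements, confirmation steps and error isolation, translate directly into code. In this minimal Python sketch, `run_isolated` wraps a single AI module so that an exception is logged and a fallback (here, the unmodified image) is returned instead of crashing the application, and `apply_with_confirmation` applies a critical action only after the user approves a preview. All names are hypothetical; `ai_style_filter` always fails on purpose to demonstrate the fallback path, and the `confirm` lambda stands in for a real UI dialog.

```python
import logging
from typing import Callable, TypeVar

T = TypeVar("T")
logger = logging.getLogger("ai_features")


def run_isolated(feature: Callable[[T], T], data: T, fallback: T) -> T:
    """Run one AI module; if it raises, log the error and return the fallback."""
    try:
        return feature(data)
    except Exception:
        # The failure stays contained in this feature; the rest of the app keeps running.
        logger.exception("AI feature %s failed; using fallback", feature.__name__)
        return fallback


def apply_with_confirmation(action, preview, confirm):
    """Apply a critical AI action only if the user approves the preview."""
    if confirm(preview):
        return action()
    return None  # user declined; nothing changes


def ai_style_filter(pixels):
    """Hypothetical AI filter that fails, to exercise the fallback path."""
    raise RuntimeError("model unavailable")


original = [[0, 128], [255, 64]]
result = run_isolated(ai_style_filter, original, fallback=original)
print(result == original)  # True: the user keeps the unfiltered image

outcome = apply_with_confirmation(
    action=lambda: "deductions applied",
    preview="3 suggested deductions",
    confirm=lambda p: False,  # the user declines in this run
)
print(outcome)  # None
```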

Conclusion

Given the lightning speed of new AI product development, the defining question is no longer "Is the AI accurate enough?" Accuracy is now the bare minimum needed to bring a product to market. Users are also bombarded with hundreds of AI tools and, after trying many of them, have become discerning and developed their own preferences. So if you are launching a new AI product, the key question should be "Is our CAIR high enough for adoption?" This shift means new AI initiatives should be jointly led by product and AI teams, giving product design and user experience the same importance as model tuning and accuracy. AI readiness can no longer be judged on accuracy alone: your AI product must instill confidence in users while keeping perceived risk and correction effort low. The race is no longer about which AI is the most accurate, but about which AI tool users love and trust the most.
