Building Safer AI: A Practical Guide to Risk, Governance, and Compliance


August 13, 2025

Artificial intelligence systems are increasingly being deployed in high-impact domains, from healthcare to finance to defense. As the capabilities of these systems grow, so too does the complexity of the risks they introduce. The urgency to build AI systems that are safe, reliable, and aligned with human values has never been greater. But AI safety isn't a singular achievement—it's a process, one that begins with clear principles and translates into grounded, operational strategies.

This post offers a structured, field-tested blueprint to help teams build safer AI systems, drawing on practical lessons and emerging industry practices that align with modern AI governance frameworks and regulatory expectations.

From Principles to Practice: Bridging the AI Safety Gap

The discourse around AI safety often begins with universally accepted high-level principles: alignment (ensuring AI behavior matches human intent), robustness (resilience against adversarial inputs or environmental changes), fairness (preventing bias and discrimination), transparency (understanding how decisions are made), and accountability (clear ownership and responsibility for AI actions). These values serve as the ethical compass for Responsible AI development and implementation.

However, while these principles are necessary, they are not sufficient.

The real challenge lies in operationalizing these ideals—embedding them into the day-to-day design, development, deployment, and monitoring of AI systems. In most organizations, the gap between aspiration and execution is wide. Teams may agree on the importance of safety but lack the tools, processes, or expertise to implement it systematically.

To bridge this AI safety gap, organizations must shift from abstract commitments to concrete, actionable frameworks rooted in AI governance and aligned with emerging AI regulation standards. This requires:

- Mapping Principles to Technical Requirements: "Alignment" must be translated into well-defined objectives, explicit models of user intent, and ongoing feedback loops. "Transparency" must translate into understandable outputs, logging mechanisms, and traceable decision pathways, all pillars of a sound AI governance framework.

- Failure Mode Analysis: Teams need to understand where, why, and how AI agents fail. Failures can take the form of subtle model drift, tool misuse, biased results, looping behavior, or misaligned optimization. Organizations should prepare for these situations in advance rather than react after they occur.

- Deploying Quantifiable Safeguards: Safety measures cannot be merely theoretical; they have to be verifiable and quantifiable. This involves incorporating risk evaluations into development cycles, stress-testing models with adversarial inputs, and verifying outputs against ethical, legal, and organizational requirements. In regulated environments, these practices support compliance with evolving AI regulations.

- Real-Time Monitoring and Intervention: AI agents are not static and can change after deployment. Hence, teams need real-time observability tools, human-in-the-loop escalation channels, and automatic correction or shutdown mechanisms when anomalies are encountered.

- Cross-Functional Cooperation: Incorporating safety into AI pipelines requires cooperation among data scientists, ML engineers, domain specialists, policy leads, and AI governance teams. Safety needs to be a collective responsibility, not something locked away in compliance or audit units.

Ultimately, bridging the AI safety gap means moving beyond principles as declarations and embedding them into the fabric of how intelligent agents are designed, monitored, and governed. It’s not just about what AI should do in theory—but how it behaves in the messiness of real-world deployment. Responsible AI autonomy depends on this translation from values to verifiable systems.

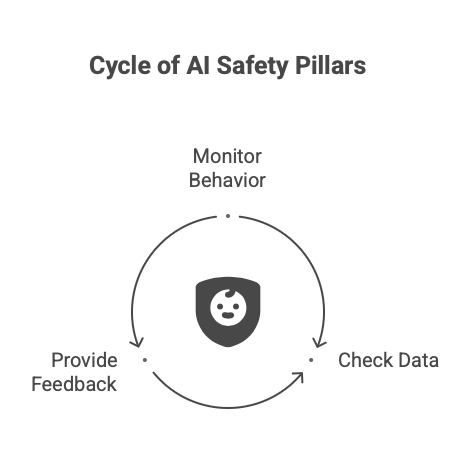

Core Pillars of Safe AI Systems

A practical approach to AI safety can be structured around three foundational pillars:

1. Behavioral Monitoring

AI models must not only perform accurately but also behave as expected in varied real-world scenarios. Monitoring behavioral drift—such as changes in output distribution, unexpected predictions, or anomalous decision patterns—is key.

Behavioral monitoring involves tracking model responses against predefined behavioral expectations. For instance, if a model trained to detect fraud starts flagging a growing share of legitimate transactions, its behavior has drifted from its intended purpose and must be corrected. Catching this kind of misalignment is an essential function of any well-designed AI governance framework.
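One way to make "changes in output distribution" measurable is the Population Stability Index (PSI), which compares a baseline sample of model scores with a recent one. The sketch below is illustrative only: the function name, the assumption that scores lie in [0, 1], and the bin count are choices made for this example, and any alert threshold (values around 0.2 to 0.25 are a common rule of thumb) should be tuned per use case.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between two samples of model scores assumed to lie in [0, 1]."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for value in values:
            # Clamp so that a score of exactly 1.0 lands in the last bin.
            index = min(max(int(value * bins), 0), bins - 1)
            counts[index] += 1
        # Floor each fraction at eps to avoid log(0) for empty bins.
        return [max(count / len(values), eps) for count in counts]

    p = bin_fractions(baseline)
    q = bin_fractions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))
```

A team might compute this daily on fraud scores, comparing the current window against the scores observed at validation time, and open an investigation when the value crosses the agreed threshold.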

2. Data-Centric Safety Checks

Much of what goes wrong in AI systems originates from data issues. Bias, missing values, label leakage, and distributional shifts are just some of the threats that compromise performance and safety.

Data privacy is an equally critical element of this pillar. Ensuring that datasets do not contain personally identifiable information (PII) or other sensitive personal data, and do not violate privacy norms, is vital for ethical and lawful deployment. This requires not only robust privacy safeguards, but also automated PII detection, rigorous label audits to catch inconsistencies or mislabeling, and ongoing analysis of distribution shifts that can degrade model performance over time.

Enterprises must validate datasets continuously for fairness, privacy, and real-world alignment—especially when models are trained on third-party or scraped data. Data-centric safety demands scrutiny across the entire ML lifecycle—from dataset design and acquisition to labeling, auditing, and deployment in dynamic environments.
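As a rough illustration of automated PII detection, the sketch below scans text records with a few regular expressions. The patterns and the `scan_for_pii` name are assumptions for this example; they cover only simple email, US Social Security number, and US-style phone formats, and a production pipeline would need a much broader and better validated detector.

```python
import re
from typing import Dict, List

# Deliberately simple, illustrative patterns (not exhaustive).
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def scan_for_pii(records: List[str]) -> Dict[int, List[str]]:
    """Map each record index to the PII categories found in that record."""
    findings: Dict[int, List[str]] = {}
    for index, text in enumerate(records):
        kinds = [
            name for name, pattern in PII_PATTERNS.items() if pattern.search(text)
        ]
        if kinds:
            findings[index] = kinds
    return findings


sample_records = [
    "Contact jane.doe@example.com for access",
    "No personal data here",
    "SSN 123-45-6789 on file",
]
pii_report = scan_for_pii(sample_records)
```

Running a scan like this as a gate before training or labeling gives the continuous validation step a concrete pass/fail signal, at the cost of false negatives for any format the patterns do not cover.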

3. Feedback and Interventions

Detecting issues is half the battle. Closing the loop with effective feedback systems and corrective mechanisms is equally critical.

Safe AI systems must support real-time feedback loops—whether through human-in-the-loop oversight, reinforcement learning mechanisms, or automated guardrails that halt or escalate problematic outputs. These interventions are not only good engineering practice but often essential for meeting AI ethics and compliance standards.
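An automated guardrail that halts or escalates outputs can be as simple as a policy check placed between the model and the user. The following is a minimal sketch under stated assumptions: the `GuardrailPolicy` fields, the blocked terms, and the 0.7 confidence threshold are hypothetical values chosen for illustration, not recommended settings.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class Action(Enum):
    ALLOW = "allow"
    ESCALATE = "escalate"  # route to a human reviewer
    BLOCK = "block"        # halt the output entirely


@dataclass(frozen=True)
class GuardrailPolicy:
    # Hypothetical defaults for illustration only.
    min_confidence: float = 0.7
    blocked_terms: Tuple[str, ...] = ("password", "ssn")


def apply_guardrail(
    output_text: str,
    confidence: float,
    policy: GuardrailPolicy = GuardrailPolicy(),
) -> Action:
    """Decide whether an output is released, escalated, or blocked."""
    lowered = output_text.lower()
    if any(term in lowered for term in policy.blocked_terms):
        return Action.BLOCK
    if confidence < policy.min_confidence:
        return Action.ESCALATE
    return Action.ALLOW
```

Blocking is checked before escalation so that a clearly disallowed output never reaches a reviewer queue as if it were merely uncertain. Logging every non-ALLOW decision also produces the audit trail that compliance reviews typically ask for.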

Operationalizing Safety: What It Looks Like in Practice

Applying AI safety in the real world requires more than theoretical ideals. It demands a concrete and systematic approach embedded into every stage of the AI lifecycle. For AI agents that interact with dynamic environments, the margin for error is small, and the consequences of failure can be significant.

Operationalizing safety means treating it as a core engineering challenge—rooted in instrumentation, observability, resilience, and AI governance.

Define Safety Metrics Early:

While precision and recall are standard in performance evaluation, they offer an incomplete picture. For safety-critical systems, organizations must incorporate metrics reflecting fairness, robustness, and uncertainty. These indicators are essential components of AI governance frameworks, enabling teams to monitor not just model accuracy but also its ethical and operational impact.

Key Safety Metrics to Track:

- Output drift / behavioral anomalies: To detect deviations in model behavior over time.

- Fairness across protected attributes: Measuring parity across gender, race, geography, and other sensitive dimensions.

- Model confidence and uncertainty scores: To understand how certain the model is in its predictions—critical for risk-aware decision-making.

- Privacy leakage detection: Identifying unintended memorization or exposure of sensitive or personally identifiable information (PII).

- Incident frequency and resolution time: Tracking how often safety incidents occur and how quickly they are addressed.

By defining and monitoring these metrics from the outset, organizations can move toward proactive risk management and uphold Responsible AI standards throughout the lifecycle.
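Two of the metrics above are straightforward to compute once the underlying data is logged. The sketch below assumes predictions are available as `(group, predicted_label)` pairs and incidents as `(opened_hour, resolved_hour)` pairs; both shapes and both function names are assumptions for this example. It uses the demographic parity gap as one possible fairness measure among several.

```python
from collections import defaultdict
from typing import Dict, Iterable, Sequence, Tuple


def demographic_parity_gap(predictions: Iterable[Tuple[str, int]]) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    positives: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for group, predicted_label in predictions:
        totals[group] += 1
        positives[group] += int(predicted_label == 1)
    if not totals:
        raise ValueError("no predictions supplied")
    rates = [positives[group] / totals[group] for group in totals]
    return max(rates) - min(rates)


def mean_resolution_hours(incidents: Sequence[Tuple[float, float]]) -> float:
    """Average time from an incident being opened to being resolved."""
    if not incidents:
        return 0.0
    return sum(resolved - opened for opened, resolved in incidents) / len(incidents)


predictions = [
    ("a", 1), ("a", 1), ("a", 0), ("a", 0),
    ("b", 1), ("b", 0), ("b", 0), ("b", 0),
]
gap = demographic_parity_gap(predictions)  # group a: 0.5, group b: 0.25
mttr = mean_resolution_hours([(0, 2), (10, 16)])
```

A gap of zero means every group receives positive predictions at the same rate. Whether a non-zero gap is acceptable depends on the domain and applicable law, so the value is best tracked over time alongside the other metrics rather than judged in isolation.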

- Monitor Continuously, Not Just at Deployment: AI systems evolve post-deployment, often in unpredictable ways. Real-time observability tools are essential. Persistent visibility into AI agent behavior allows teams to catch and mitigate issues early—supporting proactive AI regulation compliance and risk management.

- Simulate Failure Scenarios: Safety demands anticipating failure, not just reacting to it. Red-teaming, adversarial testing, and stress simulations reveal vulnerabilities and blind spots before systems go live. These activities should be codified into the broader Responsible AI strategy.

- Foster Cross-Functional Collaboration: Safety must be a collaborative effort. Input from compliance, legal, and ethics teams helps ensure AI agents operate within both moral and regulatory boundaries. This cross-functional approach is the bedrock of any effective AI governance program.
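Failure simulation can start small. The sketch below measures how often a model's label changes when inputs are perturbed in ways that should not alter their meaning. The keyword-based `toy_spam_filter` is a hypothetical stand-in for a real model, and the three perturbations are illustrative; a real red-teaming suite would be far broader.

```python
from typing import Callable, List


def flip_rate(
    model: Callable[[str], str],
    inputs: List[str],
    perturbations: List[Callable[[str], str]],
) -> float:
    """Fraction of (input, perturbation) pairs where the model's label changes."""
    flips = 0
    trials = 0
    for text in inputs:
        original_label = model(text)
        for perturb in perturbations:
            trials += 1
            if model(perturb(text)) != original_label:
                flips += 1
    return flips / trials if trials else 0.0


def toy_spam_filter(text: str) -> str:
    # Hypothetical, intentionally brittle model used only for this example.
    return "spam" if "free money" in text else "ok"


def to_upper(text: str) -> str:
    return text.upper()


def double_spaces(text: str) -> str:
    return text.replace(" ", "  ")


def leetspeak(text: str) -> str:
    return text.replace("o", "0")


rate = flip_rate(
    toy_spam_filter,
    ["free money now", "meeting at noon"],
    [to_upper, double_spaces, leetspeak],
)
```

Here every perturbation of the spam message evades the filter while the benign message is unaffected, so half of the six trials flip. Tracking a number like this across releases turns "stress simulation" into a regression test that can gate deployment.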

Laying the Groundwork for Safer Future Systems

1. Recognize the Rising Cost of Failure

As AI systems become more autonomous and embedded into decision-making pipelines, the risks associated with failure grow significantly. Mistakes made by intelligent agents can propagate through systems, lead to incorrect decisions, and cause reputational, legal, or operational damage. In such high-stakes environments, waiting for things to go wrong and reacting later is no longer a viable strategy. Safety must be designed in from the beginning.

2. Integrate Safety from Day One

Safety engineering should be an integral part of every AI development workflow. This means incorporating risk analysis, failure mode anticipation, and ethical considerations right from the design phase. Teams should identify possible edge cases and failure paths during development, and implement safeguards before deployment. Safety is not a stage—it’s a continuous thread throughout the system’s lifecycle.

3. Leverage Emerging Tooling and Infrastructure

The tooling landscape for AI safety is rapidly maturing. Platforms like AryaXAI provide model observability infrastructure that helps teams detect issues early, track system behavior over time, and debug performance degradation with clarity. These tools enable organizations to move from reactive troubleshooting to proactive issue detection and mitigation, especially important when dealing with complex, agentic behaviors.

4. Build a Culture of Safety and Shared Responsibility

Technology alone cannot ensure safety. It must be accompanied by a culture that prioritizes responsible development and open dialogue about risks. Safety should be a shared responsibility across engineering, product, design, legal, and compliance teams. Everyone involved in AI development must feel empowered to raise concerns and contribute to creating safer systems.

5. Invest in Continuous Learning and Feedback Loops

Even the best-designed systems will encounter unexpected behavior. The key is to treat every anomaly, near-miss, or system failure as a learning opportunity. Organizations should establish structured review processes, conduct post-incident analyses, and adapt systems based on real-world performance. Safety is not static—it must evolve through feedback and iteration.

6. Commit to Long-Term Resilience and Trustworthiness

As AI agents become more autonomous and integrated into critical workflows, the demand for systems that are not only effective but also transparent, controllable, and aligned with human values will intensify. Organizations must plan for long-term resilience by designing systems that can be governed, audited, and improved over time.

Final Thoughts

Creating safer AI systems is not a one-time achievement but an ongoing commitment that evolves alongside the technology itself. As AI agents become more autonomous, embedded, and impactful, the responsibility to ensure their safety, reliability, and alignment becomes even more critical.

This journey requires more than ethical intentions. It demands actionable strategies, robust AI governance, readiness for AI regulation, and cross-functional collaboration. By grounding safety practices in real-world workflows, organizations can build intelligent systems that are not only powerful but also ethical, resilient, and trusted by the societies they serve.
